Enterprise architects face a familiar problem. Vendors claim their "intelligent" agents handle everything from invoice routing to product design, while others insist rules engines remain the safest bet. Each option works brilliantly in specific situations, but choosing between them requires understanding fundamental architectural differences.

Traditional automation delivers proven results for predictable, structured tasks. Rules engines execute identical "if-then" logic millions of times without variation. However, traditional automation breaks when inputs change. Even minor field name updates require manual code rewrites. Agentic AI operates differently. AI agents perceive context, reason toward goals, and choose their own paths. That flexibility creates new risks: non-deterministic outputs, GPU budgets, and governance headaches.

This guide provides a decision framework mapping workflow complexity, data variability, and integration breadth to the right technology. You'll understand when rules engines still pay dividends and where autonomous intelligence transforms data workflows without rewriting your entire stack.

Quick Comparison

Traditional Automation:

- Follows predetermined rules and logic

- Executes identical steps every time

- Works best with structured, predictable data

- Requires manual updates when processes change

AI Agents:

- Reason through context and make decisions

- Adapt to changes in data formats and requirements

- Handle unstructured inputs like emails and PDFs

- Learn and improve from outcomes

How Traditional Automation and AI Agents Differ

Traditional automation runs on hard-coded if-then logic with linear execution paths. Each component executes exactly what developers coded. Because rules are explicit, behavior stays transparent and auditable.

AI agents perceive current context, reason toward goals, plan optimal actions, execute those actions, then learn from outcomes. This continuous cycle keeps agents effective when data formats change, business rules evolve, or edge cases emerge. The shift from rigid rules to autonomous behavior defines the core difference.

The technical stacks reflect these differences. Traditional automation uses straightforward scripting or RPA tools with relational databases. AI agents require large language models for reasoning, tool interfaces for actions, vector stores for persistent memory, and feedback mechanisms that score outcomes.

Traditional automation excels with structured, stable workflows like invoice routing by cost center codes or nightly ETL into reporting databases. Agents excel when workflows require contextual understanding and adaptation.

Data Handling and Integration Architecture

Understanding how each system processes data and integrates with your existing infrastructure reveals why they succeed in different scenarios.

Traditional automation data handling: Rule-based systems work best with predictable data structures: CSV exports, database tables, and API responses with consistent schemas. When your daily invoice batch arrives as a 500-row spreadsheet with columns in the same order every time, traditional scripts excel.

The logic is straightforward: "if column C equals 'Approved', route to accounting system." Scripts and RPA bots move data from point A to point B through API connectors and webhooks. This simplicity keeps projects fast but creates tight dependencies. A minor UI change breaks the flow. You must re-record bots or rewrite code.
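The routing rule above can be sketched in a few lines of Python. This is a minimal illustration, not a production connector: the column names (`invoice_id`, `amount`, `status`) and the sample data are assumptions made up for the example. It also shows the tight dependency described above, since renaming a single column stops the script.

```python
import csv
import io


def route_invoices(csv_text, status_column="status"):
    """Return the IDs of invoices whose status column equals 'Approved'.

    Column names are hypothetical; a real export would define its own.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    if status_column not in (reader.fieldnames or []):
        # The rule depends on an exact header name, so a rename is fatal.
        raise KeyError(f"expected column {status_column!r} not found")
    routed = []
    for row in reader:
        if row[status_column] == "Approved":
            routed.append(row["invoice_id"])
    return routed


SAMPLE = "invoice_id,amount,status\nINV-1,120.00,Approved\nINV-2,75.50,Pending\n"

# Same data, but the header "status" has been renamed upstream.
RENAMED = SAMPLE.replace("status", "approval_status", 1)

approved = route_invoices(SAMPLE)
```

Calling `route_invoices(RENAMED)` raises `KeyError`, which is the kind of hard failure that sends someone back to rewrite the script.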

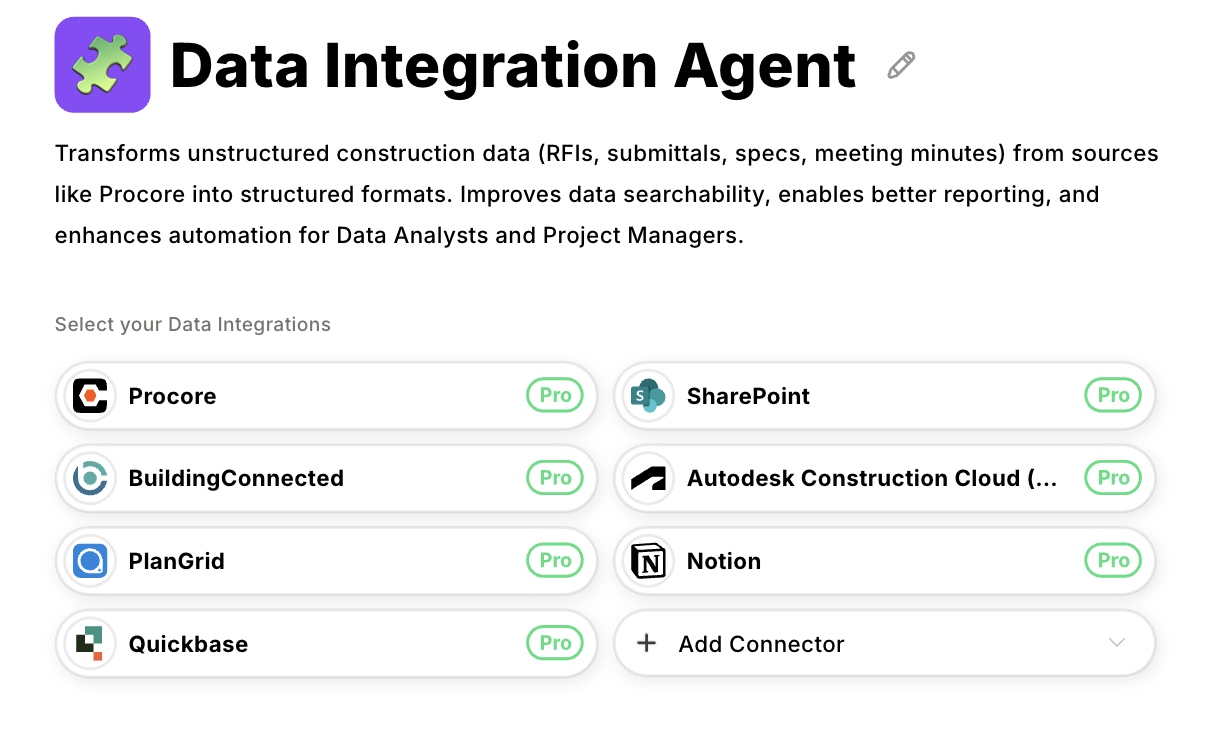

AI agent data handling: Agents handle data chaos that would paralyze traditional automation. A single agent processes email threads containing purchase orders as PDF attachments. It extracts line items using OCR, validates vendor information through API lookups, and updates your ERP system, all while maintaining context about previous conversations with the same vendor.

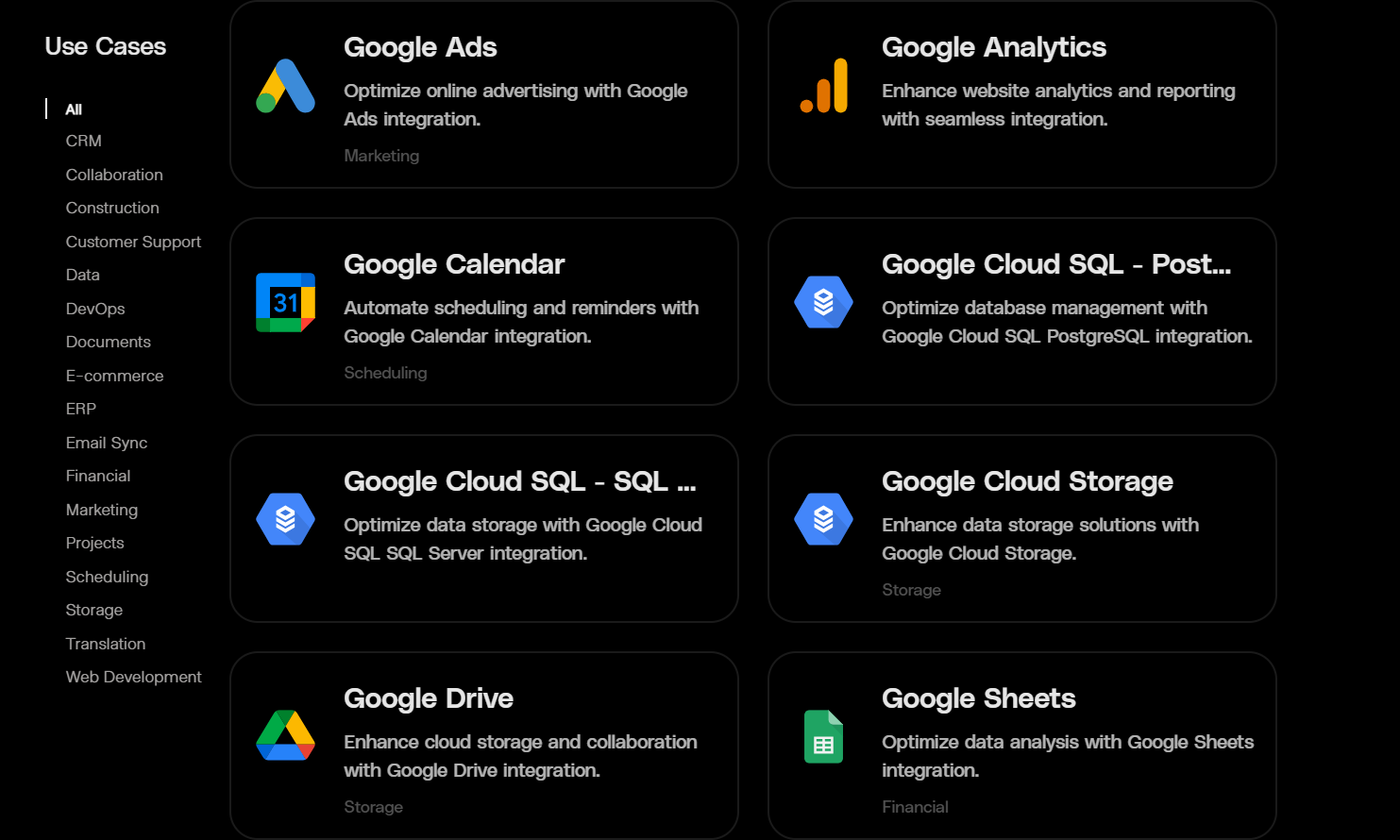

This demands a different pipeline, one that processes raw, unstructured data, creates searchable representations, stores them for quick retrieval, and makes this information available to the agent during execution. Platforms built for deterministic rules rarely include these capabilities. Datagrid provides this unified integration layer, connecting AI agents to 100+ data sources through a single platform designed for both structured and unstructured data workflows.

Traditional automation memory: Traditional automation treats each execution as isolated. Once the script completes, all context disappears. If your customer service workflow needs to reference last month's complaint history, you manually code database queries and state persistence.

AI agent memory: Agents maintain contextual memory through vector databases that automatically retrieve relevant information. The same agent that processed a vendor inquiry yesterday can continue that conversation today without losing context. This persistent memory enables agents to build understanding over time. This shift from stateless operations to contextual memory defines the architectural leap between paradigms.
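To make the idea of similarity-based recall concrete, here is a toy sketch. It is not how any particular vector database works: the `embed` function is a stand-in that counts words instead of calling an embedding model, and `MemoryStore` is a hypothetical in-memory class. Only the retrieval pattern (embed, compare, return the closest match) carries over to real systems.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """Hypothetical in-memory store; a real agent would use a vector database."""

    def __init__(self):
        self._items = []

    def remember(self, text):
        self._items.append((text, embed(text)))

    def recall(self, query, k=1):
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = MemoryStore()
memory.remember("Acme Corp asked about late payment on invoice 1042")
memory.remember("Globex requested a new shipping address")

# Today's message retrieves yesterday's Acme conversation, not the Globex one.
top = memory.recall("Acme follow-up on invoice payment")
```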

Error Handling and Testing Requirements

How each system responds to errors and what testing they require differs significantly.

Traditional automation error handling: When traditional automation encounters unexpected data (e.g., a missing field, wrong data type, or API timeout), it typically fails hard and requires human intervention to restart. Errors are binary: the process either succeeds or fails completely.

AI agent error handling: Agents evaluate confidence levels continuously. If certainty drops below thresholds, they automatically request clarification. They attempt alternative processing paths. They escalate with detailed context about what succeeded and what failed. This graceful degradation prevents total workflow failure.
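One way to picture threshold-based degradation is a small dispatch function. The sketch below is illustrative only: the `Extraction` type, the threshold values (0.9 and 0.6), and the action names are assumptions, and real agents derive confidence in model-specific ways.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    field: str
    value: str
    confidence: float  # 0.0 to 1.0, assumed to be supplied by the model


def next_action(extraction, accept_at=0.9, clarify_at=0.6):
    """Pick a path based on confidence instead of failing outright."""
    if extraction.confidence >= accept_at:
        return "accept"
    if extraction.confidence >= clarify_at:
        return "request_clarification"
    # Below both thresholds: hand off with context rather than guess.
    return "escalate_to_human"


actions = [
    next_action(Extraction("vendor", "Acme Corp", 0.97)),
    next_action(Extraction("total", "1,240.00", 0.72)),
    next_action(Extraction("due_date", "??/03", 0.31)),
]
```

The thresholds are tuning knobs: raising `accept_at` sends more items to clarification and fewer straight through.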

Traditional automation testing: Traditional workflows use standard unit tests and deterministic logging. If a test passes, the workflow will behave the same way in production for the same inputs and environment. Regression testing is straightforward because outputs never vary for identical inputs.

AI agent testing: Agent-based systems require quality checks that measure output accuracy against reference datasets. They need prompt versioning to track how instructions evolve. They need monitoring tools that trace decision-making paths. You need to understand not just what the agent did, but why it chose one action over alternatives. This additional complexity ensures agents make reliable decisions across varying inputs.
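A reference-dataset check can be as simple as the sketch below. Everything here is hypothetical: `keyword_classifier` stands in for a real agent call, the four labelled tickets stand in for a curated evaluation set, and the `prompt_version` label is just a string attached to the report so results can be compared across instruction changes.

```python
def evaluate(agent_fn, reference_cases, prompt_version):
    """Score an agent against labelled cases and keep the failures for review."""
    failures = []
    for text, expected in reference_cases:
        actual = agent_fn(text)
        if actual != expected:
            failures.append({"input": text, "expected": expected, "actual": actual})
    accuracy = 1 - len(failures) / len(reference_cases)
    return {"prompt_version": prompt_version, "accuracy": accuracy, "failures": failures}


def keyword_classifier(ticket):
    """Stand-in for an agent: classifies by a single keyword."""
    return "billing" if "invoice" in ticket.lower() else "general"


REFERENCE = [
    ("Invoice 88 is wrong", "billing"),
    ("I was charged twice", "billing"),
    ("How do I reset my password?", "general"),
    ("Please resend the invoice", "billing"),
]

report = evaluate(keyword_classifier, REFERENCE, prompt_version="v1")
```

Keeping the failed cases alongside the score matters more than the score itself, since they show where the agent's reasoning diverged from the reference.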

Selecting the Right Approach

This framework helps you evaluate workflows based on three key factors: complexity, data variability, and integration requirements. Use these criteria to determine which technology fits your specific needs.

When Does Workflow Complexity Favor Traditional Automation?

Traditional automation excels when the path is linear. "If invoice status = approved, then send to ERP" represents ideal traditional automation. Its rule engine executes millions of identical transactions without variation. You get full auditability for compliance-heavy tasks. Teams processing stable, structured records see predictable ROI because the logic rarely changes. High-volume activities like form validation or ETL still run comfortably on conventional systems.

Key question: Does the process break whenever input formatting changes? If no, traditional automation likely works well.

When Does Workflow Complexity Require AI Agents?

The moment workflows involve reasoning through multiple variables, agents provide better outcomes. Consider manual document processing, where teams spend significant time extracting requirements, cross-referencing past proposals, and generating responses. These workflows break traditional automation because the data is unstructured and the context varies with every case. AI agents handle this complexity naturally.

Key questions:

- Do users supply context in natural language you currently ignore?

- Are exceptions surfacing weekly despite extensive rule coverage?

- Could the business benefit from decisions that improve automatically over time?

If you answer yes to these questions, agents make sense.

How Does Data Variability Affect Your Choice?

Data variability determines which approach handles your inputs effectively.

Structured data favors traditional automation: When inputs arrive in consistent formats (e.g., CSV files with fixed columns, API responses with stable schemas), hard-coded scripts perform efficiently. Processing costs stay low. Maintenance requirements remain minimal. The system executes predictably.

Unstructured data requires AI agents: The moment unstructured inputs such as emails, PDFs, and chat transcripts arrive, hard-coded bots break. Every new nuance needs another rule, so maintenance costs climb steeply, and many RPA programs plateau after year one. Agentic AI turns that curve around. An AI agent can read a free-form support ticket, infer intent, and plan next steps. It combines reasoning with continuous learning. If your backlog includes document triage, sentiment-aware customer outreach, or exception-ridden insurance claims, agents deliver better results.

Consider email triage in customer support. Traditional automation routes by keywords, while AI agents read full messages, cross-reference CRM history, and draft personalized responses, accessing complete customer context that keyword matching can't provide.

How Many System Integrations Can Each Approach Handle?

Integration breadth measures how many APIs, databases, and SaaS tools your automation must coordinate.

Few integrations work with traditional automation: A handful of endpoints fits scripted workflows well. Point-to-point connections are straightforward to build and maintain. When you connect three to five systems with stable interfaces, traditional automation delivers reliable results.

Many integrations favor AI agents: As soon as you juggle dozens of systems, agents pay off. Their contextual memory lets them coordinate cross-platform actions. They adapt when systems change without requiring code updates for each field modification. Enterprises blending legacy ERPs with modern SaaS are already layering agents on top of existing bots to handle edge cases, a hybrid pattern that can improve long-term ROI in settings such as manufacturing rollouts.

Key question: Will the automation need to pull or push data across more than five systems? If yes, agents' contextual memory becomes valuable.
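The three factors can be combined into a rough triage helper. This is a sketch under stated assumptions, not a scoring model from this guide: the `Workflow` fields are hypothetical, the "more than five systems" cutoff comes from the key question above, and returning "hybrid" when the signals disagree is a simplification chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    linear_logic: bool        # complexity: is the path a fixed if-then chain?
    structured_inputs: bool   # data variability: stable schemas and formats?
    integration_count: int    # integration breadth: systems to coordinate


def recommend(workflow, integration_limit=5):
    """Count how many of the three factors point toward agents."""
    agent_signals = [
        not workflow.linear_logic,
        not workflow.structured_inputs,
        workflow.integration_count > integration_limit,
    ]
    score = sum(agent_signals)
    if score == 0:
        return "traditional automation"
    if score == len(agent_signals):
        return "AI agents"
    return "hybrid"  # mixed signals: assumption made for this sketch


nightly_etl = Workflow("nightly ETL", True, True, 2)
claims = Workflow("insurance claims triage", False, False, 12)
invoices = Workflow("invoice intake", True, False, 3)

results = [recommend(w) for w in (nightly_etl, claims, invoices)]
```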

Integration and Infrastructure Considerations

Infrastructure Requirements

Traditional automation runs on standard compute with relational databases. Agent workflows require vector databases for semantic memory, GPU resources for inference, and telemetry systems that correlate prompts and API calls. If workflows need contextual memory beyond what you explicitly provide, your architecture must support this from day one.

Security and Access Control

Rules engines receive exact fields they need. Agents benefit from broad context (e.g., conversation history, customer notes, compliance policies). Every new data source increases the potential exposure of sensitive information. Roll integrations out incrementally. Start by granting read-only access, let the agent prove it can parse accurately, then graduate to write permissions behind approval gates.
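The incremental rollout described above can be sketched as a wrapper that starts read-only and only allows writes that pass an approval check. `GatedConnector` and its `approver` callback are hypothetical names for this illustration; a real deployment would enforce the same idea through the target system's own permissions and an approval workflow.

```python
class GatedConnector:
    """Hypothetical data-source wrapper: read-only by default, gated writes."""

    def __init__(self, records, allow_writes=False, approver=None):
        self._records = dict(records)
        self._allow_writes = allow_writes
        self._approver = approver  # callable(key, value) -> bool

    def read(self, key):
        return self._records[key]

    def write(self, key, value):
        if not self._allow_writes:
            raise PermissionError("connector is read-only")
        if self._approver is None or not self._approver(key, value):
            raise PermissionError("write rejected by approval gate")
        self._records[key] = value


# Stage 1: the agent can only read.
read_only = GatedConnector({"INV-1": 100})

# Stage 2: writes enabled, but each one must pass the approval gate.
gated = GatedConnector(
    {"INV-1": 100},
    allow_writes=True,
    approver=lambda key, value: value <= 500,
)
gated.write("INV-1", 250)
```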

Scaling Economics

Traditional automation scales linearly: double your processes and you roughly double your costs. Every new process needs another bot or script. AI agents scale differently. One agent platform handles dozens of workflows, sharing reasoning and memory without duplicating infrastructure. Organizations moving from large bot fleets to unified agent platforms often see significant reductions in automation costs while handling substantially more processes.

Building Hybrid Approaches

You don't need to choose exclusively between traditional automation and AI agents. Most enterprises use both. Datagrid supports this hybrid model, allowing you to deploy rule-based workflows for predictable tasks while leveraging specialized AI agents for document processing, data enrichment, and complex decision-making, all through one platform.

A common progression moves through four stages: scripted task, orchestrated workflow, agent-in-the-loop, and fully autonomous agent. For example, invoice processing might start with rules sorting invoices by vendor, add RPA for PDF extraction and ERP updates, introduce an AI agent to handle unstructured email text with human approval, then move to full autonomy with audit oversight.

So then, what stays rule-based versus what moves to agents? Stable data tasks like user provisioning remain scripts. Language-heavy, exception-filled processes (e.g., customer communications, contract analysis, document classification) move to agents. Keep deterministic rules for compliance-critical functions like PII masking and approval thresholds. Let agents operate inside those constraints.
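As a rough illustration of agents operating inside deterministic constraints, the sketch below wraps an agent call with fixed rules for PII masking and an approval threshold. The regular expressions are deliberately simple, the 10,000 limit is an arbitrary example value, and `stub_agent` is a placeholder for a real agent; none of these come from a specific product.

```python
import re

# Simplified patterns for illustration; production masking needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text):
    """Deterministic rule: always applied, regardless of what the agent does."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN.sub("[SSN]", text)


def run_with_guardrails(agent_fn, request, approval_limit=10_000):
    """Mask input and output, and flag amounts above the approval threshold."""
    draft = agent_fn(mask_pii(request))
    return {
        "reply": mask_pii(draft["reply"]),
        "amount": draft["amount"],
        "needs_approval": draft["amount"] > approval_limit,
    }


def stub_agent(request):
    """Placeholder agent: echoes the request and proposes a fixed amount."""
    return {"reply": f"Processing refund for: {request}", "amount": 12_500}


result = run_with_guardrails(stub_agent, "Refund jane.doe@example.com, SSN 123-45-6789")
```

The agent never sees the raw identifiers, and the threshold check runs on every result, so the compliance behavior stays auditable even though the agent's reply text can vary.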

Build the Right Automation Architecture with Datagrid

Datagrid eliminates the forced choice between traditional automation and AI agents by providing the infrastructure to support both:

- Unified data integration for any automation approach: Connect to 100+ business systems through a single platform, whether you're building rule-based workflows for structured data or deploying AI agents that process emails, PDFs, and unstructured content. Your architecture supports both without managing separate integration layers.

- Specialized AI agents that handle real complexity: Deploy purpose-built agents for document processing, data enrichment, and workflow automation that adapt to changing requirements. These agents maintain contextual memory across interactions while operating within the compliance guardrails your deterministic systems require.

- Hybrid deployment that scales with your needs: Start with rule-based automation for stable workflows and introduce AI agents where data variability demands intelligent decision-making. The platform grows with your architecture as you progress from scripted tasks to autonomous agents.

Get started with Datagrid to build automation architecture that combines the reliability of traditional systems with the adaptability of AI agents.