Your BD manager knows exactly why you lost three aerospace RFPs this quarter: the engineering team couldn't answer questions about quality certifications fast enough, pricing came in competitive but technical credibility faltered during evaluation, and competitors demonstrated stronger integration capabilities with existing production systems.

That intelligence lives in her head. It didn't inform the RFP your team submitted last month. And when she moves to a different role or a different company, those lessons walk out the door.

Manufacturing proposal teams lose deals for predictable reasons. Pricing misalignment, technical capability gaps that surface too late, and competitive positioning that misses what actually matters to procurement committees all contribute to lost opportunities.

The patterns exist across your won and lost bids, but without systematic capture, each proposal starts from scratch. Sellers and buyers also often perceive lost deals differently, and without structured win/loss analysis, that perception gap makes root causes hard to identify.

Why Manufacturing Proposals Fail

Your sales team believes pricing killed the deal. But the buyer's engineering team may have eliminated you during technical evaluation because your proposal didn't demonstrate integration with their existing production systems.

In manufacturing, this perception gap is especially acute because technical complexity masks the true decision factors. Several core issues contribute to proposal failures:

- Mislabeled objections. Production capacity concerns get labeled as "pricing objections." Quality system compatibility issues surface as "timeline delays."

- Siloed technical knowledge. Your applications engineer knows exactly which quality certifications matter for aerospace customers, but that expertise never reaches the proposal team responding to an aerospace RFP.

- Inaccessible historical data. Your pricing analyst has data showing that bundled service agreements win more often in capital equipment deals, but that pattern sits in a spreadsheet nobody else can access.

- Inconsistent capability claims. Each proposal team reinvents the wheel, making inconsistent claims about capabilities and missing opportunities to apply lessons from similar deals won or lost months earlier.

- Disconnected proposal development. When one team member understands buyer objections while another drafts technical specifications, the resulting proposal contains disconnects that evaluators notice.

The knowledge exists, but it's trapped in individual minds, departmental systems, and disconnected documents.

Organizations that take a rigorous approach to win/loss analysis often see meaningful win rate improvements within a few proposal cycles of launching a structured program. Yet most manufacturing companies treat proposal outcomes as isolated events rather than systematic learning opportunities.

Build a Systematic Capture Framework

Effective win/loss programs require structured data collection across five interconnected categories that inform future RFP strategy and capability investments. Cross-functional teams must coordinate on data collection. Engineering, sales, pricing, and operations each hold pieces of the intelligence puzzle. That intelligence only becomes actionable when combined systematically.

Track Technical Capability and Requirements Data

Document how your technical proposals performed against evaluation criteria. Record which engineering specifications resonated with buyers, where your specifications fell short, and how competitors positioned their capabilities differently.

Track technical capability data across four critical areas:

- Quality of technical pre-sales support and responsiveness during evaluation

- Sales engineering effectiveness and proof-of-concept results

- Production requirements, capacity constraints, and quality system interfaces

- Regulatory compliance specifications appearing as critical evaluation criteria

Manual analysis of this data reveals patterns over time. Which quality certifications appear most frequently in won versus lost bids? How do technical specification formats correlate with evaluation scores? Where do competitors consistently outperform your technical positioning?

Tracking these patterns across multiple proposal cycles identifies capability gaps and specification approaches that drive buying decisions.
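The first question above, which certifications show up in won versus lost bids, becomes a simple tally once each bid is recorded consistently. The following is a minimal sketch that assumes a hypothetical `BidOutcome` record with a `certifications` field. The field names and the four sample bids are made up and do not come from any real CRM or dataset.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class BidOutcome:
    """One historical bid. Field names are hypothetical, not from a specific CRM."""
    bid_id: str
    won: bool
    certifications: set[str] = field(default_factory=set)


def win_rate_by_certification(bids: list[BidOutcome]) -> dict[str, tuple[int, int, float]]:
    """Return {certification: (wins, losses, win_rate)} over the bids that cited it."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])
    for bid in bids:
        for cert in bid.certifications:
            # Index 0 counts wins, index 1 counts losses.
            counts[cert][0 if bid.won else 1] += 1
    return {
        cert: (wins, losses, wins / (wins + losses))
        for cert, (wins, losses) in counts.items()
    }


# Made-up sample data for illustration only.
bids = [
    BidOutcome("B1", True, {"AS9100", "NADCAP"}),
    BidOutcome("B2", False, {"ISO 9001"}),
    BidOutcome("B3", True, {"AS9100"}),
    BidOutcome("B4", False, {"AS9100", "ISO 9001"}),
]
for cert, (w, l, rate) in sorted(win_rate_by_certification(bids).items()):
    print(f"{cert}: {w} won, {l} lost, win rate {rate:.0%}")
```

A handful of bids produces noisy rates, so treat the results as prompts for review, not conclusions. The same tally applies to any other bid attribute, such as pricing structure.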

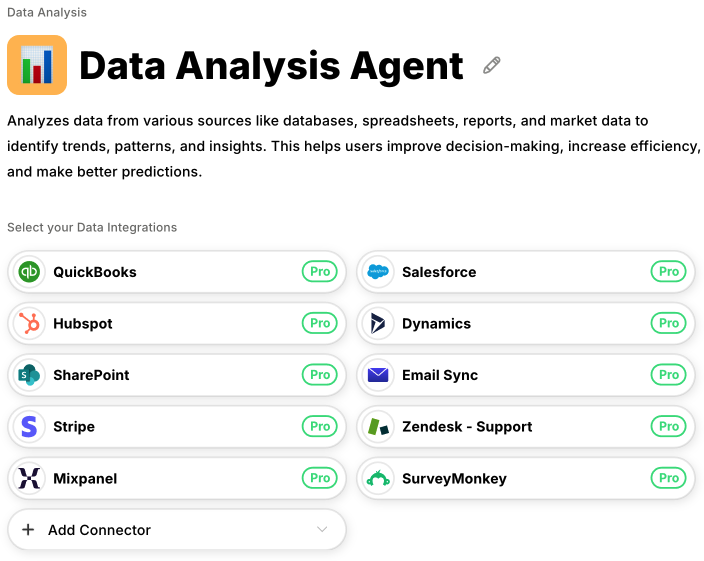

Datagrid's Data Analysis Agent analyzes historical proposal data to identify which technical specifications and pricing structures correlate with wins, automatically surfacing these patterns when similar RFPs arrive. This transforms scattered win/loss data into qualification guidance that informs your next bid.

Capture Competitive Intelligence and Pricing Data

Companies should implement systematic workflows for competitive price-to-win analysis. Track competitor pricing estimates against actual bid prices to calibrate pricing accuracy over time. Document which technical capabilities differentiated winning proposals and how competitors positioned unique manufacturing technologies or certifications.
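Calibrating price-to-win estimates means comparing each estimate with the competitor's actual bid once that price becomes known. This minimal sketch assumes you can pair each pre-bid estimate with an actual competitor price. How those actuals are obtained, for example through debriefs or award notices, is also an assumption. `calibration_report` is a hypothetical helper, and the figures are sample data.

```python
from statistics import mean


def calibration_report(pairs: list[tuple[float, float]]) -> dict[str, float]:
    """Summarize how closely price-to-win estimates matched actual competitor bids.

    Each pair is (our estimate of the competitor's price, competitor's actual price).
    Errors are expressed as fractions of the actual price.
    """
    errors = [(estimate - actual) / actual for estimate, actual in pairs]
    return {
        "mean_abs_error": mean(abs(e) for e in errors),
        # Positive means we tend to overestimate competitor prices.
        "mean_signed_error": mean(errors),
    }


# Sample history: (estimate, actual) for three past competitive bids.
history = [(1_200_000, 1_000_000), (950_000, 1_000_000), (1_100_000, 1_000_000)]
report = calibration_report(history)
print({name: round(value, 3) for name, value in report.items()})
```

Reporting the signed error alongside the absolute error separates systematic bias, where estimates consistently run high or low, from ordinary scatter.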

Map Customer Decision Criteria

Map both stated and unstated decision factors through buyer interviews conducted 2-4 weeks after the final decision. Beyond the published evaluation criteria, track which production efficiency needs or operational challenges actually drove the selection.
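Unstated decision factors are the ones buyers cite in interviews that the RFP never listed. This sketch assumes interview notes have already been coded into consistent factor labels, since free-text notes need that step first. It counts the unstated factors across deals using Python's `collections.Counter`. The function name and sample factors are illustrative.

```python
from collections import Counter


def unstated_factors(deals: list[tuple[set[str], set[str]]]) -> list[tuple[str, int]]:
    """Rank decision factors buyers cited in interviews but the RFP never listed.

    Each deal is (published_criteria, factors_cited_in_interview), using
    the same factor labels across all deals.
    """
    counts = Counter()
    for published, cited in deals:
        counts.update(cited - published)  # keep only factors missing from the RFP
    return counts.most_common()


# Made-up sample deals.
deals = [
    ({"price", "lead time"}, {"price", "MES integration"}),
    ({"price", "quality certification"}, {"MES integration", "production capacity"}),
    ({"price"}, {"price", "production capacity", "MES integration"}),
]
print(unstated_factors(deals))
```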

Measure Workflow Efficiency Metrics

Document proposal development time by section, subject matter expert availability, and content reuse patterns. Teams with systematic proposal management workflows achieve higher win rates and submit more proposals annually than teams without them.
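The three efficiency measures above can be computed from a simple per-section log. The `SectionLog` fields are hypothetical choices for this sketch, including `sme_wait_days`, which stands in for subject matter expert availability. The sample entries are also made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SectionLog:
    """Hypothetical log entry for one section of one proposal."""
    proposal_id: str
    section: str
    hours: float           # drafting and review time for this section
    reused_content: bool   # True if built mainly from previously approved content
    sme_wait_days: float   # days spent waiting on subject matter expert input


def workflow_metrics(logs: list[SectionLog]) -> dict:
    """Average hours and SME wait per section, plus the overall content reuse rate."""
    if not logs:
        raise ValueError("need at least one log entry")
    hours: dict[str, list[float]] = defaultdict(list)
    waits: dict[str, list[float]] = defaultdict(list)
    for log in logs:
        hours[log.section].append(log.hours)
        waits[log.section].append(log.sme_wait_days)
    return {
        "avg_hours": {s: sum(v) / len(v) for s, v in hours.items()},
        "avg_sme_wait_days": {s: sum(v) / len(v) for s, v in waits.items()},
        "reuse_rate": sum(log.reused_content for log in logs) / len(logs),
    }


logs = [
    SectionLog("P1", "technical", 40, False, 5),
    SectionLog("P1", "pricing", 12, True, 1),
    SectionLog("P2", "technical", 30, True, 3),
    SectionLog("P2", "pricing", 10, True, 0),
]
print(workflow_metrics(logs))
```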

Understanding intelligent automation fundamentals helps teams identify which proposal activities benefit most from AI assistance.

Structure Competitive Intelligence Extraction

Manufacturing organizations should track competitive intelligence across multiple streams before specific opportunities arise.

Identify Proactive Collection Sources

Useful sources to monitor include:

- Competitor job advertisements revealing capability expansion plans 6-12 months before public announcements

- Corporate sources (e.g., websites, annual reports, press releases)

- Market-facing sources (e.g., product documentation, conference presentations, trade press)

- Opportunity-specific analysis from past encounters

Your competitive intelligence sits scattered across email threads, proposal archives, and market communications. The applications engineer remembers a competitor's quality certification announcement from six months ago. The pricing analyst has notes on typical competitor bundling strategies. The BD manager recalls specific positioning language from lost opportunities. This intelligence needs systematic capture in a searchable knowledge base before it's needed for active pursuits.

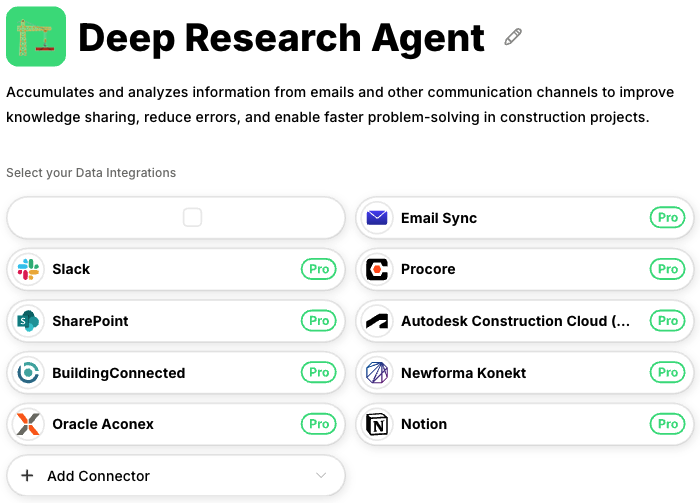

Datagrid's Deep Research Agent accumulates competitive intelligence from emails, proposal archives, and market communications, building a searchable knowledge base of competitor positioning and capabilities. This prevents the intelligence loss that occurs when BD managers transition to new roles and their accumulated competitive insights disappear.

Conduct Opportunity-Specific Analysis

Align competitive understanding to each specific bid by tracking competitor proposal positioning, pricing structures, and solution configurations. When customer evaluation feedback becomes available, capture it systematically rather than letting it dissipate into individual memory.

Apply an Evaluation Matrix for Comparative Analysis

Strategic assessment frameworks evaluate proposals across multiple criteria (e.g., ease of evaluation, clarity of presentation, needs alignment, solution transparency, professional presentation, relevant differentiators, and content optimization). Scoring your proposal and the strongest competitor's proposal against the same criteria enables a quantitative "Us vs. Best" assessment. That assessment pinpoints specific gaps, such as "competitors score 8/10 on professional presentation while we score 5/10," so organizations can prioritize the improvements that close competitive vulnerabilities.
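The "Us vs. Best" comparison is straightforward arithmetic. Score both proposals on the same criteria, subtract, and rank the shortfalls. This minimal sketch uses the criteria listed above on a 1-10 scale. The scores are invented, and `rank_gaps` is a hypothetical helper.

```python
# Scores on a 1-10 scale for the same criteria; the values are made up.
us = {"ease of evaluation": 7, "clarity of presentation": 6, "needs alignment": 8,
      "solution transparency": 6, "professional presentation": 5,
      "relevant differentiators": 7, "content optimization": 6}
best = {"ease of evaluation": 8, "clarity of presentation": 7, "needs alignment": 7,
        "solution transparency": 8, "professional presentation": 8,
        "relevant differentiators": 7, "content optimization": 7}


def rank_gaps(us: dict[str, int], best: dict[str, int]) -> list[tuple[str, int]]:
    """Return (criterion, gap) where the best competitor outscores us, largest gap first.

    Both dicts must score the same criteria.
    """
    gaps = [(criterion, best[criterion] - us[criterion])
            for criterion in us if best[criterion] > us[criterion]]
    return sorted(gaps, key=lambda item: item[1], reverse=True)


for criterion, gap in rank_gaps(us, best):
    print(f"{criterion}: behind by {gap}")
```

Criteria where you match or beat the best competitor drop out, leaving a prioritized list of gaps. If evaluators weight criteria unequally, you could extend the sketch by multiplying each gap by its evaluation weight before sorting.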

Establish Workflow and Responsibilities

Win/loss programs fail when nobody owns execution. Without clear accountability for conducting interviews, synthesizing findings, and distributing insights, even well-designed capture frameworks produce sporadic data that never reaches the teams who need it.

Assign specific roles for interview scheduling, feedback documentation, and stakeholder reporting to ensure consistency across every proposal outcome.

Time Your Interviews Strategically

The optimal window for win/loss interviews is 2-4 weeks after the final decision is announced, when evaluation details remain fresh but emotions have settled. In manufacturing contexts, that means interviewing 2-4 weeks after the decision for losses and 3-4 weeks after contract signing for wins.
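The interview timing guidance translates directly into date arithmetic. This sketch encodes the windows above: losses 2-4 weeks after the decision, and wins 3-4 weeks after contract signing. `interview_window` is a hypothetical helper. It returns a range to schedule within, not a single fixed date.

```python
from datetime import date, timedelta

# (earliest, latest) weeks after the anchor date, per the guidance above.
WINDOWS_WEEKS = {"loss": (2, 4), "win": (3, 4)}


def interview_window(outcome: str, anchor_date: date) -> tuple[date, date]:
    """Return (earliest, latest) interview dates for a "win" or "loss".

    anchor_date is the decision announcement for losses and the
    contract signing date for wins.
    """
    start_weeks, end_weeks = WINDOWS_WEEKS[outcome]
    return (anchor_date + timedelta(weeks=start_weeks),
            anchor_date + timedelta(weeks=end_weeks))


print(interview_window("loss", date(2024, 3, 1)))
print(interview_window("win", date(2024, 3, 1)))
```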

Use Third-Party Interviewers for Candid Feedback

Third-party interviewers often elicit more candid feedback than internal teams, because buyers speak more openly when they don't worry about damaging ongoing relationships. Deploy third-party interviewers for high-value strategic accounts and competitive losses. Use trained internal teams (never the account sales representative) for standard deal flow.

Distribute Knowledge by Stakeholder Needs

Win/loss programs support better outcomes when their findings are accessible across the organization rather than confined to one team. Tailor distribution to stakeholder needs:

- Sales teams. Monthly competitive intelligence summaries with actionable objection responses, including specific talk tracks for common buyer concerns

- Product/engineering teams. Quarterly feature gap analysis informing R&D priorities, with ranked lists of capabilities competitors offered that you lacked

- Pricing teams. Quarterly pricing perception analysis across won and lost deals, including win rate correlations by pricing structure

- Executive leadership. Quarterly strategic insights for market positioning decisions, with trend analysis across multiple proposal cycles
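The distribution plan above is effectively a small schedule. This minimal sketch records each audience's cadence and report focus. It assumes quarterly reports go out in the first month of each calendar quarter (January, April, July, October). That timing and the `audiences_due` helper are assumptions, not part of the plan as written.

```python
# Audience -> (cadence, report focus), summarized from the distribution list above.
REPORTS = {
    "sales": ("monthly", "competitive intelligence summary with objection responses and talk tracks"),
    "product/engineering": ("quarterly", "feature gap analysis with ranked capability gaps"),
    "pricing": ("quarterly", "pricing perception analysis with win rates by pricing structure"),
    "executive leadership": ("quarterly", "strategic insights with multi-cycle trend analysis"),
}


def audiences_due(month: int) -> list[tuple[str, str]]:
    """Return (audience, report focus) pairs due in a given month (1-12).

    Assumes quarterly reports are sent in January, April, July, and October.
    """
    if not 1 <= month <= 12:
        raise ValueError("month must be between 1 and 12")
    quarter_start = month % 3 == 1
    return [
        (audience, focus)
        for audience, (cadence, focus) in REPORTS.items()
        if cadence == "monthly" or (cadence == "quarterly" and quarter_start)
    ]


for month in (1, 2):
    print(month, [audience for audience, _ in audiences_due(month)])
```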

Automate the Capture and Analysis Workflow

Manual win/loss programs deliver measurable ROI but don't scale. Your BD team can't interview every buyer or cross-reference pricing decisions across hundreds of historical bids.

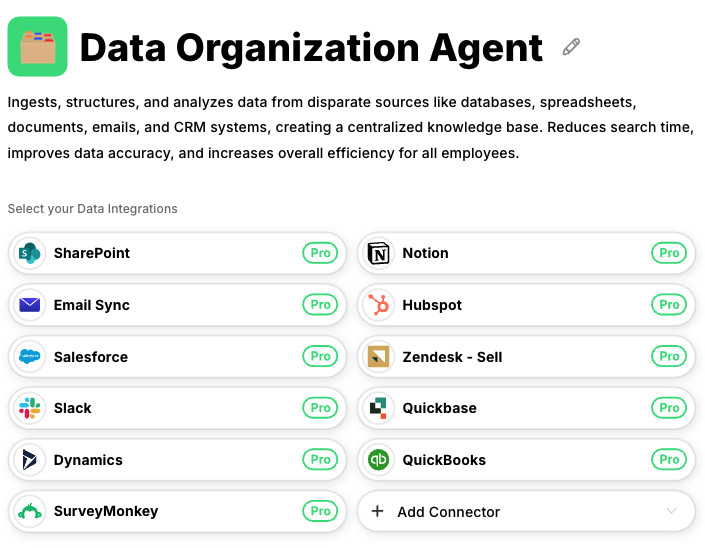

Datagrid's Data Organization Agent can ingest data from CRM systems, proposal management platforms, and historical win/loss interviews, creating a centralized knowledge base that surfaces relevant context during active RFP pursuits.

When a new aerospace RFP arrives, the agent can automatically surface lessons from similar deals, identifying which technical approaches won, which pricing structures failed, and what competitive positioning resonated. This helps prevent institutional knowledge loss when experienced team members transition to new roles. Teams that connect their CRM with collaboration platforms can further streamline how proposal intelligence flows across departments.
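To make "surface lessons from similar deals" concrete, here is a generic illustration that ranks past deals by tag overlap (Jaccard similarity) with a new RFP. It does not describe how Datagrid's agent works internally and only shows the general idea. The `PastDeal` record, tags, lessons, and the `min_score` threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PastDeal:
    """Hypothetical record of a closed deal with a short lesson learned."""
    name: str
    tags: frozenset[str]
    won: bool
    lesson: str


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_deals(rfp_tags: frozenset[str], history: list[PastDeal],
                  top_k: int = 2, min_score: float = 0.2) -> list[tuple[float, PastDeal]]:
    """Return up to top_k past deals at or above min_score, most similar first."""
    scored = [(jaccard(rfp_tags, deal.tags), deal) for deal in history]
    scored = [(score, deal) for score, deal in scored if score >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


history = [
    PastDeal("Aerospace bracket RFP", frozenset({"aerospace", "AS9100", "MES integration"}),
             False, "Lost on weak MES integration evidence"),
    PastDeal("Defense housing RFP", frozenset({"defense", "AS9100"}),
             True, "Won with a bundled service agreement"),
    PastDeal("Automotive fixture RFP", frozenset({"automotive", "IATF 16949"}),
             True, "Won on lead time"),
]
rfp = frozenset({"aerospace", "AS9100", "MES integration", "bundled service"})
for score, deal in similar_deals(rfp, history):
    print(f"{score:.2f} {deal.name}: {deal.lesson}")
```

Tag overlap is crude and depends entirely on consistent tagging. It still shows the principle: the closest past deals, with their lessons, come up first.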

The practical approach involves implementing structured manual workflows for win/loss interviews while deploying Datagrid's Data Organization Agent to capture and surface intelligence across your proposal ecosystem. Organizations can also streamline proposal development by automating repetitive document generation tasks.

Turn Individual Expertise into Institutional Intelligence

The challenge isn't knowing what works. It's ensuring that systematic analysis of won and lost proposals informs every pursuit consistently.

Systematic win/loss analysis builds the intelligence foundation. Manufacturing companies that see measurable win rate improvements build their capture workflows in stages. They start with structured capture of buyer decision data through post-deal interviews, progress to pattern recognition across proposal cycles, and evolve toward predictive qualification guidance. Automated document workflows help keep proposal materials organized and accessible across project teams.

Your best BD manager's insights shouldn't retire when she does. Systematize win/loss analysis through structured interviews, competitive intelligence capture, and cross-functional knowledge distribution.

Leveraging AI for trend detection helps identify winning patterns faster, while automated data summarization reduces time spent manually reviewing past proposals. Organizations that democratize these insights across sales, product, and pricing functions will see meaningful performance improvements, including better win rates.

Automate Win/Loss Analysis with Datagrid

Datagrid's AI agents can help manufacturing proposal teams capture, organize, and apply win/loss intelligence systematically:

- Historical proposal analysis: The Data Analysis Agent can identify which technical specifications and pricing structures correlate with wins, surfacing these patterns automatically when similar RFPs arrive.

- Competitive intelligence capture: The Deep Research Agent can accumulate competitor positioning from emails, proposal archives, and market communications, building a searchable knowledge base that persists beyond individual team members.

- Centralized knowledge base: The Data Organization Agent can ingest data from CRM systems, proposal platforms, and interview records, creating a unified repository that surfaces relevant context during active pursuits.

- Pattern recognition across cycles: AI agents can cross-reference pricing decisions, technical approaches, and competitive positioning across hundreds of historical bids to identify what drives buying decisions.

- Institutional knowledge preservation: Captured insights remain accessible when experienced BD managers transition to new roles, scaling your best practices across the entire team.

Create a free Datagrid account to start transforming scattered win/loss data into qualification guidance that informs every proposal.