AI proof-of-concepts stall the moment they need enterprise data. Siloed CRMs, ERPs, and cloud applications block agents from accessing the context they need to function effectively. Security teams add weeks of audit requirements while scalability concerns emerge as soon as usage increases. Projects designed for quick wins turn into quarter-long integration efforts.

Enterprise AI integration challenges are the technical and organizational obstacles that prevent companies from connecting AI agents to production data systems. These challenges include fragmented data access, security and governance requirements, and scaling complexity.

Fragmented data integration is a significant factor in missed AI deployment deadlines and project cancellations, driving up costs and damaging stakeholder confidence. Companies that solve data connectivity quickly convert experiments into revenue-generating systems, creating competitive advantages in enterprise AI adoption.

This guide addresses three blockers that repeatedly derail enterprise AI: data silos, security bottlenecks, and scaling from pilot to production. We'll explore each obstacle in depth, then provide an actionable framework for overcoming them through disciplined scoping, secure connections, and measurable outcomes.

Challenge 1: Data Access Across Disconnected Systems

Data access challenges occur when enterprise information is scattered across disconnected systems. Marketing keeps leads in HubSpot, finance guards numbers in an on-prem Oracle instance, and operations logs projects in a niche SaaS app, with no shared view.

Data Fragmentation Blocks AI Model Training

Siloed CRMs, ERPs, and home-grown databases force you to work with incomplete, outdated information daily. Data teams stitch these sources together with ad-hoc CSV exports, introducing typos, timestamp mismatches, and missing columns that require hours of cleanup and erode trust in results.

This fragmentation creates a cascade of problems for enterprise AI adoption. First, data silos produce incomplete datasets. Second, incomplete datasets cause models to train on partial or noisy information. Third, poorly trained models deliver wrong forecasts and biased recommendations. As a result, AI projects derail before they launch.

Legacy systems expose decades-old flat-file interfaces or proprietary protocols, forcing teams to build brittle, one-off scripts that break whenever vendors issue patches. Meanwhile, inconsistent schemas and duplicate records slip through manual gates, undermining data quality and forcing endless re-training cycles.

Unified Data Access Eliminates Custom Integration

Break this cycle by introducing a unified data layer, an integration platform that captures changes as they happen. Start by inventorying every source, no matter how obscure, then rank each by business value. Deploy standardized connectors that translate native formats into a consistent structure. These connectors process data before it reaches your data lake or storage system, eliminating the need for custom transformation code.

Pre-built connectors eliminate weeks of custom code and move you straight to validation. Run automated profiling to flag null spikes, schema drift, or duplicate keys and fix issues at the edge instead of in your model notebook. The result is a single, governed feed that analytics teams can query in minutes rather than days, dramatically shrinking cycle times.
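
As a rough illustration of what automated profiling at the edge might look like, here is a minimal Python sketch. The function name `profile_batch`, the 5% null-rate threshold, and the field names are assumptions made for this example, not part of any particular platform.

```python
from collections import Counter


def profile_batch(rows, expected_columns, key, max_null_rate=0.05):
    """Return human-readable findings for one batch of dict records.

    Hypothetical helper: thresholds and messages are illustrative only.
    """
    findings = []
    expected = set(expected_columns)

    # Schema drift: columns that appeared or disappeared versus the expectation.
    seen = set()
    for row in rows:
        seen.update(row.keys())
    for col in sorted(seen - expected):
        findings.append(f"schema drift: unexpected column '{col}'")
    for col in sorted(expected - seen):
        findings.append(f"schema drift: missing column '{col}'")

    # Null spikes: share of rows where an expected column is None.
    total = len(rows)
    for col in sorted(expected & seen):
        nulls = sum(1 for row in rows if row.get(col) is None)
        if total and nulls / total > max_null_rate:
            findings.append(f"null spike: '{col}' is null in {nulls}/{total} rows")

    # Duplicate keys: the same key value appearing in more than one row.
    counts = Counter(row.get(key) for row in rows)
    for value, n in sorted(counts.items(), key=lambda kv: str(kv[0])):
        if value is not None and n > 1:
            findings.append(f"duplicate key: {key}={value!r} appears {n} times")

    return findings
```

Running a check like this where the connector lands data means a bad batch can be quarantined before it ever reaches a model notebook.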

Define clear APIs (Application Programming Interfaces) so producers know exactly which fields (customer_id, close_date, invoice_total) downstream models expect. Version those interfaces so breaking changes surface fast and trigger automated tests. Create service accounts with minimal required permissions instead of reusing personal credentials, satisfying audit teams worried about uncontrolled data exports. Resist patching gaps with bash scripts; every shortcut becomes technical debt tomorrow.
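
One way to picture a versioned interface is a small data contract that records can be validated against. The sketch below is an assumption-laden illustration: the `Contract` class, the `INVOICE_V1` constant, and the chosen field types are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Contract:
    """Hypothetical data contract: a name, a version, and required field types."""
    name: str
    version: int
    fields: dict  # field name -> required Python type


# Illustrative contract; the field types are assumptions for this example.
INVOICE_V1 = Contract(
    "invoice", 1,
    {"customer_id": str, "close_date": date, "invoice_total": float},
)


def violations(record, contract):
    """List the ways a record fails to satisfy the contract."""
    problems = []
    for field, expected_type in contract.fields.items():
        if field not in record:
            problems.append(f"{field}: missing")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


def breaking_changes(old, new):
    """Fields removed or retyped between versions; added fields are non-breaking."""
    changes = []
    for field, old_type in old.fields.items():
        if field not in new.fields:
            changes.append(f"removed {field}")
        elif new.fields[field] is not old_type:
            changes.append(f"retyped {field}")
    return changes
```

A test suite could call `breaking_changes` whenever a producer proposes a new version, so a removed field fails a build instead of a forecast.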

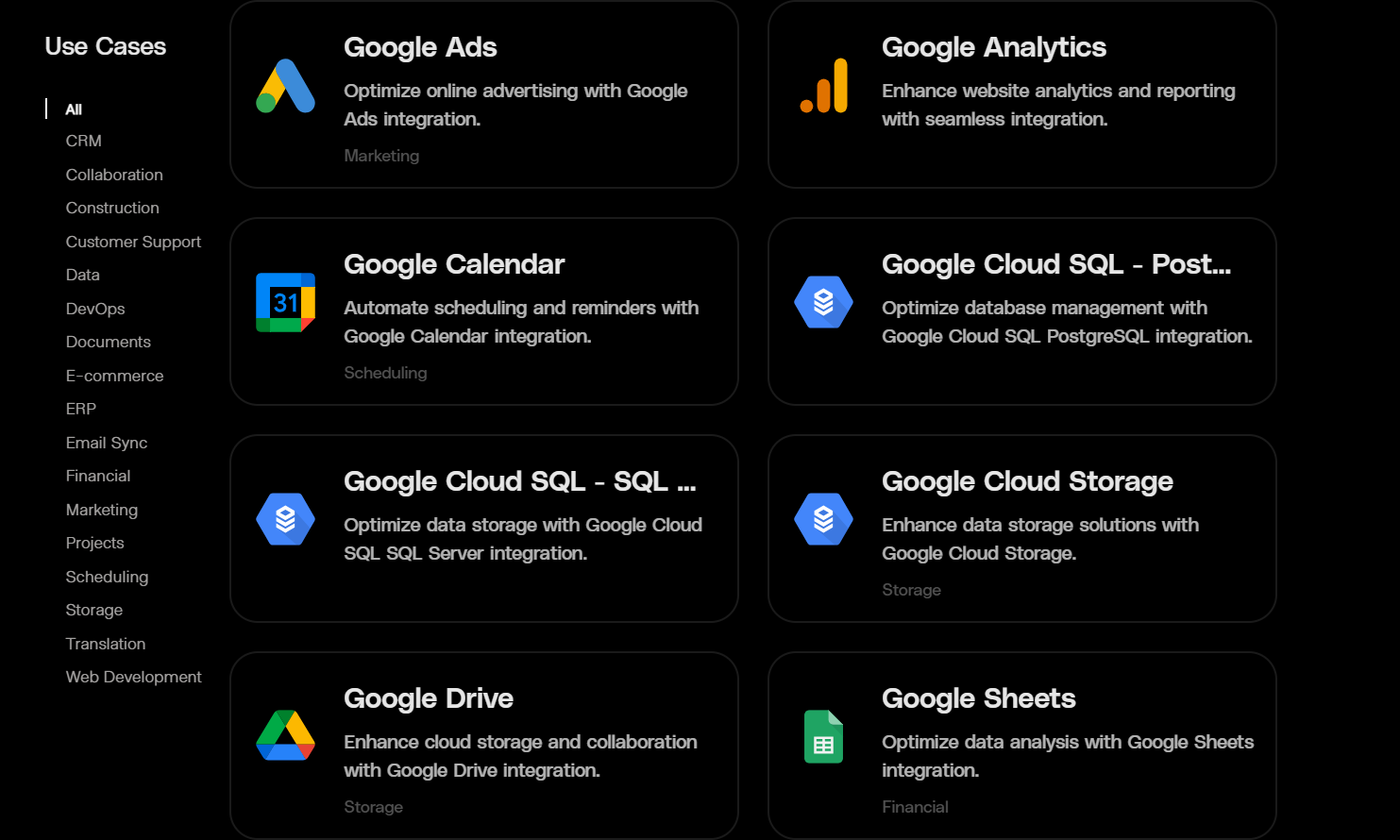

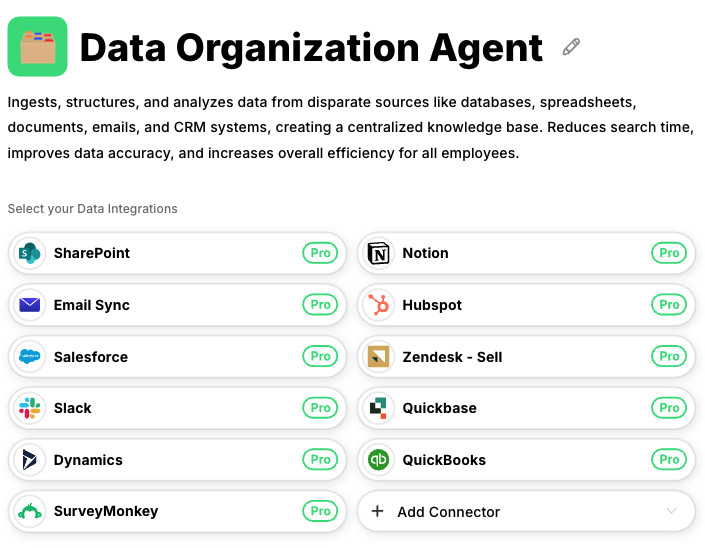

Platforms like Datagrid provide this unified data layer with pre-built connectors to over 100 business systems, eliminating the months typically spent building custom integrations.

AI agents access information from CRM, ERP, and document systems through standardized interfaces that handle authentication and schema translation automatically.

Three failures cause most system disruptions:

- Authentication errors when expired tokens or rotated passwords block connectors

- Schema drift after upstream teams add columns or rename fields

- Latency spikes that snowball into timeouts during peak loads

How to recover from connector failures:

- Authentication errors: Re-issue credentials and retest the connector. Validate token expiry settings and update credential rotation schedules.

- Schema drift: Compare current payloads to expected structure. Hot-fix field mappings or roll back changes to restore compatibility.

- Latency spikes: Trace network metrics to identify bottlenecks. Reroute traffic to standby regions and scale buffers to absorb traffic bursts.

Proactive monitors (token expiry alerts, schema change detection, and latency tracking) catch most issues before business users notice.
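
Two of those monitors, token expiry alerts and latency tracking, can be sketched in a few lines of Python. The function names, the seven-day warning window, and the nearest-rank quantile are assumptions chosen for illustration.

```python
import math
from datetime import datetime, timedelta, timezone


def token_alert(expires_at, now, warn_window=timedelta(days=7)):
    """Classify a credential as 'expired', 'expiring_soon', or 'ok'."""
    if expires_at <= now:
        return "expired"
    if expires_at - now <= warn_window:
        return "expiring_soon"
    return "ok"


def latency_alert(samples_ms, threshold_ms, quantile=0.95):
    """True when the chosen quantile of recent latencies exceeds the threshold."""
    if not samples_ms:
        return False
    ordered = sorted(samples_ms)
    # Nearest-rank quantile: smallest sample with at least `quantile` of data at or below it.
    index = max(0, math.ceil(quantile * len(ordered)) - 1)
    return ordered[index] > threshold_ms
```

Wiring `token_alert` into a daily job gives credential owners a week of notice, which is usually enough to rotate a secret before a connector goes dark.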

When clean, connected data arrives automatically, you stop firefighting spreadsheets and start training agents that move revenue, engagement, and cost metrics. That's the foundation every successful enterprise AI adoption requires.

Challenge 2: Security & Governance Requirements

Security and governance requirements are the compliance frameworks and audit processes that organizations must satisfy before deploying AI agents to production. A single misconfigured permission or missing audit trail could trigger fines under GDPR (General Data Protection Regulation) or the EU AI Act, whose obligations are phasing in.

Continuous Authentication Prevents Security Breaches

Security reviews can stretch a one-week rollout into a quarter-long project. Fear of breaches and non-compliance is now a top reason enterprise AI projects stall or get shelved altogether. Recent surveys of global CIOs and CISOs document this pattern, with security concerns cited as a primary deployment blocker. If you can't prove your agent is safe, it never sees production.

The first security hurdle for enterprise AI is classic cybersecurity, with a twist. AI adds new attack surfaces (model poisoning, prompt injection, unauthorized data scraping) on top of familiar threats. Counter them with continuous authentication: every call your agent makes is authenticated, authorized, and re-verified rather than trusted after a one-time login.

Encrypt traffic in transit with TLS 1.3 (Transport Layer Security 1.3) and protect model artifacts at rest. Security reference architectures outline the baselines. Map identities to service accounts, not shared keys, so you can revoke access instantly if credentials leak.
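
To make per-call verification and instant revocation concrete, here is a minimal sketch using only Python's standard library. The token format (account name plus expiry, signed with HMAC-SHA256), the `authorize_call` function, and the action names are assumptions for illustration; a production system would normally rely on its identity provider instead.

```python
import hashlib
import hmac


def sign(secret, account, expires_at):
    """Sign 'account|expiry' with HMAC-SHA256 (hypothetical token format)."""
    message = f"{account}|{expires_at}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def authorize_call(secret, account, expires_at, signature, *, now,
                   revoked, allowed_actions, action):
    """Check every call: not revoked, not expired, signature valid, action permitted."""
    if account in revoked:
        return False  # revocation takes effect on the very next call
    if now >= expires_at:
        return False  # short-lived credentials limit the damage of a leak
    expected = sign(secret, account, expires_at)
    if not hmac.compare_digest(expected, signature):
        return False  # constant-time comparison avoids timing leaks
    return action in allowed_actions.get(account, set())
```

Because the check runs on each request, adding a service account to the `revoked` set cuts off access immediately, without waiting for a session to end.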

Automated Compliance Frameworks Accelerate Approvals

Governance demands more. The EU AI Act requires documented risk management, data lineage, and human oversight, while the AI RMF (Risk Management Framework) from NIST (National Institute of Standards and Technology) recommends similar practices on a voluntary basis.

CISO playbooks recommend convening a cross-functional governance board to oversee AI deployments, with board approval typically required for high-risk or significant new agents rather than every agent. Meanwhile, compliance resources provide practical mappings of control requirements, helping you translate legal text into executable policies.

Here's how to turn these demands into a deployable framework that accelerates enterprise AI adoption:

- Integrate the agent into your existing identity management so roles, not individual tokens, dictate privileges.

- Encode policies as executable rules, using tools like OPA (Open Policy Agent) and its Rego policy language, so approvals shift from documents to automated checks.

- Run a "compliance dry-run": simulate production traffic in staging, capture logs, validate encryption, and export evidence packages for auditors.

- Add data lineage tags at every pipeline hop; lineage tracking underpins incident response when breaches occur.

- Schedule continuous controls monitoring; risk-based approaches keep you ahead of drift.
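
The idea of policies as executable rules can be sketched in plain Python. This is not OPA or Rego; it is a simplified stand-in, and the role names, request fields, and `evaluate` function are assumptions made for the example.

```python
def evaluate(request, rules):
    """Return (allowed, reasons). Any failed rule denies the request."""
    reasons = [message for check, message in rules if not check(request)]
    return (not reasons, reasons)


# Illustrative rules; role names and request fields are hypothetical.
RULES = [
    (lambda r: r.get("role") in {"agent-reader", "agent-writer"},
     "role is not an approved agent role"),
    (lambda r: r.get("action") != "write" or r.get("role") == "agent-writer",
     "write requires agent-writer"),
    (lambda r: r.get("encrypted_in_transit") is True,
     "traffic must be encrypted in transit"),
]
```

Because missing attributes fail the checks, an incomplete request is denied by default, and the list of reasons doubles as evidence for an audit trail.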

When internal auditors arrive, being prepared matters more than being perfect. Use this repeatable, five-item Compliance Accelerator checklist to pass on the first try:

- Inventory: list data sources, model endpoints, and third-party APIs (Application Programming Interfaces) with owners

- Access: verify minimal permission roles and regular credential rotation

- Encryption: confirm TLS 1.3 in transit, AES-256 (Advanced Encryption Standard with 256-bit keys) encryption at rest, plus key-management ownership

- Lineage: export end-to-end data-flow diagrams and retention policies

- Logging: demonstrate tamper-proof logs with regular alert reviews
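
For the logging item, one common way to make tampering detectable is to chain log entries by hash. The sketch below is a minimal illustration under stated assumptions: `append_entry`, `verify_chain`, and the event fields are hypothetical, and a chain like this makes edits evident rather than impossible, so it complements write-once storage instead of replacing it.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with this event's canonical JSON."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, event)})


def verify_chain(log):
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor can rerun `verify_chain` on an exported log and get a yes-or-no answer without trusting the system that produced it.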

Governance shouldn't kill innovation. By automating evidence collection and enforcing controls in code, you give security teams the transparency they need while keeping your agents moving from pilot to production in days, not quarters.

Challenge 3: Scaling & Maintaining AI Agent Deployments

Pilot agents handle single workflows beautifully. Production deployments multiply that complexity across dozens of agents, each requiring container images, secrets management, and separate cloud billing.

Agent Sprawl Creates Operational Chaos

Agent sprawl is the uncontrolled proliferation of AI agents that consume budgets faster than they deliver business value. Without proper architecture, it quickly turns into operational chaos.

Large enterprises report that poorly governed agents create three operational problems. First, agents duplicate processing across systems. Second, agents fail when APIs change unexpectedly. Third, poorly managed agents generate more support tickets than productivity gains, according to studies on scaling and orchestration pitfalls.

Management overhead escalates when every new agent needs identity provisioning, secrets rotation, and version tracking. Teams deploying hundreds of agents struggle to audit actions or trace decisions through complex workflows, creating governance gaps that McKinsey identifies as core enterprise risk. Cloud costs spike unpredictably as concurrency bursts, duplicate context windows, and idle containers transform modest POCs into expensive infrastructure bills.

Many projects stall at enterprise rollout because legacy systems can't sustain agent traffic loads.

Controlled Scaling Eliminates Management Overhead

Controlled scaling requires treating each agent as an independent service with clearly defined data contracts. Containers provide repeatable builds, rapid rollbacks, and precise compute resource allocation, following scalability best practices.

Event-driven coordination separates agent management from execution. Message queues buffer traffic spikes, and listeners activate agents only when new data arrives, which reduces idle costs and smooths the impact of rate limits.
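
The queue-and-listener pattern can be shown in miniature with Python's standard library. This is an in-process sketch only: a real deployment would use a message broker, and `process_burst`, the `None` sentinel, and the buffer size are assumptions for illustration.

```python
import queue
import threading


def run_worker(events, handle, results, errors):
    """Block until an event arrives, process it, and stop on the None sentinel."""
    while True:
        event = events.get()  # the worker sleeps here while the queue is empty
        if event is None:
            break
        try:
            results.append(handle(event))
        except Exception as exc:  # keep the listener alive if one event fails
            errors.append((event, exc))


def process_burst(burst, handle, maxsize=100):
    """Push a burst of events through a bounded queue to a single listener."""
    events = queue.Queue(maxsize=maxsize)
    results, errors = [], []
    worker = threading.Thread(target=run_worker,
                              args=(events, handle, results, errors))
    worker.start()
    for event in burst:
        events.put(event)  # blocks when the buffer is full, applying backpressure
    events.put(None)  # sentinel: real events must therefore never be None
    worker.join()
    return results, errors
```

The bounded queue is the design choice that matters here: producers slow down when the buffer fills, so a spike delays work instead of overwhelming the downstream system.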

Platforms like Datagrid handle this scaling complexity through automated infrastructure management and resource allocation. Teams deploy agents without configuring infrastructure or managing cloud resources, converting pilot successes into production deployments in days rather than months.

Maintenance automation prevents configuration inconsistencies that undermine enterprise AI adoption. Automated testing systems run checks against staging environments whenever agents update prompts, dependencies, or data schemas.

Centralized monitoring tools track each request from start to finish, eliminating the need to check multiple dashboards during incident response. Cost monitoring with automated thresholds prevents silent budget expansion; alerts trigger when token usage or compute time exceeds predefined limits. Matching agent resources to actual workload demands eliminates wasteful resource consumption, following enterprise playbooks.
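
A threshold check on token usage and compute time might look like the following sketch. The `Budget` class, the 80% warning ratio, and the agent names are assumptions for this example rather than settings from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    """Hypothetical per-agent limits for one billing period."""
    max_tokens: int
    max_compute_seconds: float


def budget_alerts(usage_by_agent, budgets, warn_ratio=0.8):
    """Return (agent, level, metric) tuples for usage near or over its limit."""
    alerts = []
    for agent, usage in sorted(usage_by_agent.items()):
        budget = budgets.get(agent)
        if budget is None:
            # An agent running without a budget is itself worth an alert.
            alerts.append((agent, "no_budget", None))
            continue
        checks = (
            ("tokens", usage.get("tokens", 0), budget.max_tokens),
            ("compute_seconds", usage.get("compute_seconds", 0.0),
             budget.max_compute_seconds),
        )
        for metric, used, limit in checks:
            if used > limit:
                alerts.append((agent, "exceeded", metric))
            elif used >= warn_ratio * limit:
                alerts.append((agent, "warning", metric))
    return alerts
```

Flagging agents that have no budget at all is a cheap guard against sprawl, since unowned agents are often where silent spend accumulates.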

Track Performance, Costs, and Reliability Before Issues Impact Users

Track latency, error rates, spending anomalies, and data freshness delays with escalation protocols tailored to system context. Visualize these metrics in the same dashboard monitoring application health. Single-pane visibility shows whether yesterday's scaled agents continue delivering measurable business value today.

Framework for Overcoming Challenges in Enterprise AI Adoption

Now that you understand the three core obstacles (data fragmentation, security friction, and scaling complexity), here's how to address them systematically. You don't need a six-month data program to prove AI agents work. Start with one high-value data source connected to one agent, validating your approach against each challenge area before expanding.

Before connecting an AI agent to production data, validate three readiness foundations. Technical preparation means securing at least one documented endpoint, read/write credentials tied to a service account, and a non-production sandbox where the agent can run safely.

Organizational readiness demands an executive sponsor who can unblock resources and crystal-clear KPIs like "time saved on data entry" or "lead enrichment accuracy." Security requirements include verified minimal permission roles and compliance liaison availability for rapid sign-offs.

Deploy Your First Secure Connection

Identify the most reliable path to your data, whether a REST (Representational State Transfer) API, a database view, or a maintained SFTP (Secure File Transfer Protocol) folder. Document request patterns, rate limits, and error responses before deployment.

Create a dedicated service account with minimal required permissions, configure secure authentication, and store credentials in your existing vault. If the data source lacks modern authentication, add an API gateway to handle OAuth (Open Authorization) protocols.

Scope ruthlessly by focusing on one data trigger, one automated action, one measurable outcome. The agent might enrich CRM records when sales reps save prospects, or post summaries in Slack when critical support tickets arrive.

Because data flows through your secure connection, you avoid weeks of custom scripting while proving immediate value. Within days, you have an AI agent processing real data automatically, plus a reusable connection pattern for other systems.

The pilot's success provides evidence and organizational support to expand data integration without relitigating feasibility or security concerns, creating a foundation for broader enterprise AI adoption.

Three-Layer Architecture That Eliminates Custom Integration Work

Data teams spend substantial time connecting systems instead of analyzing information. Point-to-point scripts break when schemas change. Nightly data transfers create stale data by morning. Security reviews stall every AI project for weeks because nobody knows which systems actually need access.

Modern enterprise AI integration solves this through three connected layers that let AI agents access information instantly while maintaining security controls.

Start with unified access, a consistent interface that exposes every source through standardized APIs. Instead of building custom connectors for each CRM, ERP, and database, teams query a single logical layer. Companies using this approach substantially reduce new source onboarding time and eliminate duplicate extracts that consume storage and processing power.

Security controls wrap around access through policy-based permissions that follow information wherever it flows. Encryption, access logs, and role-based permissions get enforced automatically at API endpoints. Teams satisfy audit requirements without creating separate "compliant" copies that drift out of sync with production systems.

AI agents operate through event-driven systems that scale horizontally and recover from failures automatically. Agents subscribe to changes rather than polling batch tables, reducing latency and infrastructure costs. When agents fail, new instances spin up and resume processing without manual intervention.
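
The recovery behavior described here usually rests on checkpointing: an agent records how far it has read, so a replacement instance can pick up from that point. The sketch below assumes an in-memory list as the change log and a dict as the checkpoint store; both, along with the `consume` function, are hypothetical simplifications.

```python
def consume(change_log, checkpoint, handle):
    """Process changes after the stored offset, advancing it after each success.

    If `handle` raises, the offset still points at the failed change, so the
    next instance retries it (at-least-once delivery).
    """
    offset = checkpoint.setdefault("offset", 0)
    for position in range(offset, len(change_log)):
        handle(change_log[position])
        checkpoint["offset"] = position + 1  # commit only after success
    return checkpoint["offset"]
```

Because a change can be retried after a crash, handlers in this style should be idempotent, meaning that processing the same change twice leaves the target system in the same state.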

Build, Buy, or Hybrid: Choosing Your Implementation Path

Your implementation approach depends on internal capabilities and timeline requirements:

Build internally: You get complete control over every connector and security policy, but your team owns maintenance, compliance updates, and 24/7 operations, and costs escalate as sources and regulatory requirements multiply. Choose this path when you need that control over proprietary systems and have dedicated engineering resources for ongoing maintenance.

Buy a platform: Vendor solutions provide pre-built connectors, event-driven agent engines, and enterprise security controls, with maintenance and compliance updates handled by the vendor. This path works best for organizations that want to launch pilots substantially faster using standardized integration patterns.

Hybrid approach: Custom code handles proprietary mainframes or strict sovereignty requirements while a vendor platform manages the majority of standard integrations. This path suits enterprises with mixed requirements and is common when legacy systems or regulations prevent full cloud adoption.

Match architecture patterns to your environment. Cloud-native organizations use fully managed access layers. Hybrid enterprises deploy gateways that proxy on-premises systems into cloud APIs. Air-gapped sectors replicate the same patterns within secure enclaves.

Whatever your constraints, aligning access, security controls, and agent runtime transforms integration from a bottleneck into reliable infrastructure that powers enterprise AI adoption.

Eliminate Integration Delays with Datagrid

Teams building enterprise AI face the same three obstacles: fragmented data access, security bottlenecks, and scaling complexity. Datagrid addresses these challenges through unified data infrastructure built specifically for AI agent deployments:

- Unified data access without custom integration: Connect AI agents to over 100 business systems through pre-built connectors that handle authentication, schema translation, and API versioning automatically. Eliminate months of custom development work that typically blocks AI projects.

- Enterprise security and governance controls: Deploy AI agents with role-based access controls, automated audit logs, and compliance frameworks that satisfy security reviews. Reduce approval cycles from quarters to weeks through automated evidence collection.

- Managed scaling from pilot to production: Convert proof-of-concept successes into production deployments without configuring infrastructure or managing cloud resources. Containerized architecture handles peak loads automatically while preventing agent sprawl and cost overruns. Whether processing invoices, enriching CRM records, or handling PDF documents, agents auto-scale without manual resource management.

- Real-time monitoring and maintenance automation: Track agent performance, costs, and errors through centralized dashboards that surface issues before they impact business workflows. Automated testing catches schema drift and integration failures proactively.

Get started with Datagrid to eliminate processing delays and deploy agents connected to production data in days rather than months.