This article was last updated on December 9, 2025

Picture the end of a quarter. You're juggling half a dozen proposals, each buried in PDFs, Word files, or email threads. Pulling price tables into spreadsheets, chasing finance for discount approvals, and reconciling three "final" versions can swallow hours per deal.

Meanwhile, insights that could tell you why the last deal won or lost sit scattered across CRM attachments and shared drives, invisible when the next opportunity lands. The cost shows up as repeated pricing mistakes, missed differentiators, and leadership blind to patterns hiding in plain sight.

Datagrid's AI agents eliminate that data processing bottleneck.

By connecting to Salesforce, HubSpot, Google Drive, and every document repository you already use, they extract proposal data automatically, surface winning elements through pattern analysis, and free you to focus on strategy instead of copy-paste.

Let's go over how to standardize an evaluation framework, see how AI agents automate side-by-side comparisons, and walk through a pragmatic rollout plan that scales from pilot to full-team adoption.

Standardize Your Proposal Evaluation Framework

Sales teams submit proposals without knowing what separates wins from losses. Evaluation criteria live in different heads, vary by region, and change based on who's reviewing the deal. AI agents can't automate comparison without consistent standards. They'll just accelerate the chaos.

The foundation starts with documenting the five elements that consistently differentiate winning proposals from losing ones:

- Pricing structures determine your margin and cash flow through discount levels, payment terms, and contract length.

- Scope definitions specify exactly which deliverables, features, and service levels customers receive, preventing scope creep that kills profitability.

- Timeline commitments set implementation milestones and deadlines that directly impact customer time-to-value and satisfaction scores.

- Terms and conditions cover the liability caps, warranties, and risk allocation clauses that legal and finance teams scrutinize for deal approval.

- Competitive positioning articulates the specific differentiators that convince buyers you solve their problem better than alternatives (the factors that close deals when everything else is equal).

Document each element as a scored criterion with clear ratings. A simple 1-5 scale works when everyone agrees what constitutes a "5" versus a "3."

Apply weights that mirror your strategic priorities (e.g., solution fit 25%, commercial attractiveness 30%, competitive positioning 20%, implementation risk 15%, terms favorability 10%). Weighted evaluation matrices make the scoring transparent and repeatable across deals.
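As a minimal sketch of how a weighted matrix turns ratings into one number, the Python below uses the example percentages above. The criterion keys, the `weighted_score` helper, and the sample ratings are illustrative assumptions, not part of any Datagrid API. It also assumes a higher rating is always better, so a high `implementation_risk` rating means low risk.

```python
# Weights mirror the example percentages in the text; key names are illustrative.
WEIGHTS = {
    "solution_fit": 0.25,
    "commercial_attractiveness": 0.30,
    "competitive_positioning": 0.20,
    "implementation_risk": 0.15,  # assumption: 5 = lowest risk
    "terms_favorability": 0.10,
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 ratings into a single weighted score on the same 1-5 scale."""
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must cover exactly the weighted criteria")
    for name, rating in ratings.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"{name} rating must be between 1 and 5")
    total = sum(WEIGHTS[name] * rating for name, rating in ratings.items())
    return round(total, 2)


# Hypothetical ratings for a single proposal.
example = {
    "solution_fit": 4,
    "commercial_attractiveness": 3,
    "competitive_positioning": 5,
    "implementation_risk": 2,
    "terms_favorability": 4,
}
print(weighted_score(example))  # 3.6
```

Because the weights sum to 1, the result stays on the same 1-5 scale as the individual ratings, which keeps scores comparable across deals.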

Validation against historical outcomes is crucial. Pull recent won and lost deals, score their proposals using your criteria, and identify which factors consistently appeared in victories.
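One simple way to run that validation is to compare the average rating of each criterion in won deals against lost deals. The sketch below assumes a small hand-scored history. The deal records, criterion names, and `rating_gap` helper are hypothetical, and a real check would need far more deals than four.

```python
from statistics import mean

# Hypothetical hand-scored history: outcome plus 1-5 ratings per criterion.
history = [
    {"outcome": "won", "ratings": {"pricing": 4, "scope": 5, "timeline": 3}},
    {"outcome": "won", "ratings": {"pricing": 3, "scope": 4, "timeline": 4}},
    {"outcome": "lost", "ratings": {"pricing": 4, "scope": 2, "timeline": 3}},
    {"outcome": "lost", "ratings": {"pricing": 3, "scope": 3, "timeline": 4}},
]


def rating_gap(deals: list[dict], criterion: str) -> float:
    """Average rating in won deals minus average rating in lost deals."""
    won = [d["ratings"][criterion] for d in deals if d["outcome"] == "won"]
    lost = [d["ratings"][criterion] for d in deals if d["outcome"] == "lost"]
    return mean(won) - mean(lost)


gaps = {c: rating_gap(history, c) for c in ("pricing", "scope", "timeline")}
# The criterion with the largest gap separates wins from losses most clearly.
strongest = max(gaps, key=gaps.get)
print(strongest, gaps[strongest])
```

A criterion with a gap near zero is a candidate for a lower weight, since it did not distinguish wins from losses in this sample.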

Standardized scoring reveals patterns immediately. Discount thresholds that preserve margins, timeline promises that ensure successful delivery, and differentiators that close deals become visible. Leadership gains visibility into which levers actually move win rates, and new reps ramp faster because successful strategies are built into the evaluation process.

Most importantly, consistent data gives AI agents reliable inputs to automate, transforming scattered proposal knowledge into scalable competitive advantage.

How AI Agents Automate Proposal Comparison

AI agents automate the manual comparison process (downloading PDFs, copying prices into spreadsheets, hunting through email threads for the latest redlines, and chasing finance for margin checks) at machine speed while preserving the context you need to make sound decisions.

Automated Data Extraction and Normalization

The automation begins the moment a proposal lands, whether it's a 40-page PDF, a Word scope document, or an email quote. An intake agent ingests the file and passes it to a document-processing agent.

Optical character recognition (OCR) and large-language-model (LLM) parsing pull out tables, clause snippets, and timeline commitments, then map them to a standardized schema: total_price, discount_rate, uptime_sla, liability_cap, and so on.
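To make the standardized schema concrete, here is a minimal sketch of a record type built from the field names above. The `ProposalRecord` class, the parsing helpers, and the raw string formats are assumptions for illustration. They do not describe how Datagrid stores extracted data.

```python
from dataclasses import dataclass


@dataclass
class ProposalRecord:
    total_price: float    # in the currency stated in the document
    discount_rate: float  # fraction, e.g. 0.15 for 15%
    uptime_sla: float     # fraction, e.g. 0.999 for 99.9%
    liability_cap: float  # same currency as total_price


def parse_money(text: str) -> float:
    """Turn a string such as '$120,000' into 120000.0 (assumes a '$' prefix or none)."""
    return float(text.replace("$", "").replace(",", "").strip())


def parse_percent(text: str) -> float:
    """Turn a string such as '15%' into the fraction 0.15."""
    return float(text.strip().rstrip("%")) / 100


def to_record(raw: dict[str, str]) -> ProposalRecord:
    """Map raw extracted strings onto the typed schema."""
    return ProposalRecord(
        total_price=parse_money(raw["total_price"]),
        discount_rate=parse_percent(raw["discount_rate"]),
        uptime_sla=parse_percent(raw["uptime_sla"]),
        liability_cap=parse_money(raw["liability_cap"]),
    )


# Hypothetical strings as they might come out of an extraction step.
record = to_record({
    "total_price": "$120,000",
    "discount_rate": "15%",
    "uptime_sla": "99.9%",
    "liability_cap": "$1,000,000",
})
print(record)
```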

Because every proposal now speaks the same language, a normalization agent can convert currencies, align contract lengths, and translate "per-user per-month" into the common unit your finance team cares about.
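The normalization step can be sketched as a conversion to one common unit. The example below assumes annual contract value in USD is the unit finance cares about. The exchange rates are hard-coded placeholders, and the billing labels and function name are hypothetical.

```python
# Placeholder exchange rates to USD; a real pipeline would source these from finance.
USD_PER_UNIT = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}


def annual_contract_value_usd(
    unit_price: float,
    currency: str,
    users: int,
    billing: str = "per_user_per_month",
) -> float:
    """Convert a quoted price into annual contract value in USD."""
    if billing == "per_user_per_month":
        annual = unit_price * users * 12
    elif billing == "per_user_per_year":
        annual = unit_price * users
    elif billing == "flat_annual":
        annual = unit_price
    else:
        raise ValueError(f"unknown billing model: {billing}")
    return round(annual * USD_PER_UNIT[currency], 2)


# Two hypothetical quotes that look different on paper but are now comparable.
print(annual_contract_value_usd(50, "USD", 100))  # 60000.0
print(annual_contract_value_usd(40, "EUR", 100))  # 52800.0
```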

Intelligent Scoring and Pattern Recognition

Once the data is structured, comparison becomes instantaneous. A scoring agent applies your weighted criteria (price, scope, risk, timeline) and produces an overall fit score alongside dimension scores.

Simultaneously, a pattern-recognition agent benchmarks the proposal against past wins and losses, spotting non-obvious drivers such as discounts above certain thresholds in specific segments that correlate with margin erosion.
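A very small version of that kind of pattern check groups past deals by segment and discount band, then compares average margin. The sketch below is an assumption-heavy illustration: the deal data, the 20% threshold, and the `margin_by_discount_band` helper are all hypothetical, and grouped averages show correlation, not cause.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical closed deals with realized margin.
deals = [
    {"segment": "mid_market", "discount_rate": 0.10, "margin": 0.42},
    {"segment": "mid_market", "discount_rate": 0.25, "margin": 0.28},
    {"segment": "mid_market", "discount_rate": 0.30, "margin": 0.24},
    {"segment": "enterprise", "discount_rate": 0.10, "margin": 0.40},
    {"segment": "enterprise", "discount_rate": 0.25, "margin": 0.38},
]


def margin_by_discount_band(deals: list[dict], threshold: float = 0.20) -> dict:
    """Average margin per (segment, band), where band is relative to the threshold."""
    groups = defaultdict(list)
    for deal in deals:
        band = "above" if deal["discount_rate"] > threshold else "at_or_below"
        groups[(deal["segment"], band)].append(deal["margin"])
    return {key: round(mean(values), 3) for key, values in groups.items()}


summary = margin_by_discount_band(deals)
for (segment, band), avg_margin in sorted(summary.items()):
    print(segment, band, avg_margin)
```

In this made-up sample, deep discounts coincide with a much larger margin drop in mid-market than in enterprise, which is the kind of segment-specific signal the text describes.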

A workflow agent pushes results back into Salesforce or HubSpot, creates tasks for legal when liability caps exceed policy, and notifies the account team in Slack with a plain-language summary.
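The routing logic behind that hand-off can be sketched as a pure function that decides which follow-up actions a scored proposal needs. The sketch deliberately makes no real Salesforce, HubSpot, or Slack calls. The action types, field names, and policy value are hypothetical.

```python
def route_actions(scored: dict, policy_liability_cap: float) -> list[dict]:
    """Return the follow-up actions for one scored proposal as plain dictionaries."""
    actions = [{
        "type": "crm_update",
        "opportunity_id": scored["opportunity_id"],
        "fields": {"fit_score": scored["fit_score"]},
    }]
    # Only involve legal when the proposed cap is above company policy.
    if scored["liability_cap"] > policy_liability_cap:
        actions.append({
            "type": "legal_task",
            "opportunity_id": scored["opportunity_id"],
            "reason": "liability cap exceeds policy",
        })
    actions.append({
        "type": "team_notification",
        "message": (
            f"Proposal {scored['opportunity_id']} scored "
            f"{scored['fit_score']:.1f} out of 5."
        ),
    })
    return actions


# Hypothetical scored proposal and policy limit.
scored = {"opportunity_id": "OPP-001", "fit_score": 3.6, "liability_cap": 2_000_000}
actions = route_actions(scored, policy_liability_cap=1_000_000)
for action in actions:
    print(action["type"])
```

Keeping the decision separate from the delivery makes the rules easy to test before any integration is wired up.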

Eliminating Manual Errors and Surfacing Insights

These automated steps address the core challenges you face today. Transcription errors drop away because no one is copying and pasting figures between documents.

Evaluation becomes consistent because every deal is judged by the same rubric, not by whoever built the spreadsheet that week.

Insights you'd never catch manually (like a competitor's favorite renewal clause or the SLA combination that boosts win rate) surface in real time, giving you the ammunition to respond to prospects before a rival revises their offer.

Because every proposal is captured as structured fields, teams can track metrics such as discount levels and turnaround time across deals and refine their strategy based on what the data shows.

Real-Time Decision Support

For sales reps, that means spending minutes, not hours, answering a prospect's "What if we add a third-year option?"

For leadership, it means live dashboards that correlate proposal elements with revenue, instead of stale quarter-end post-mortems.

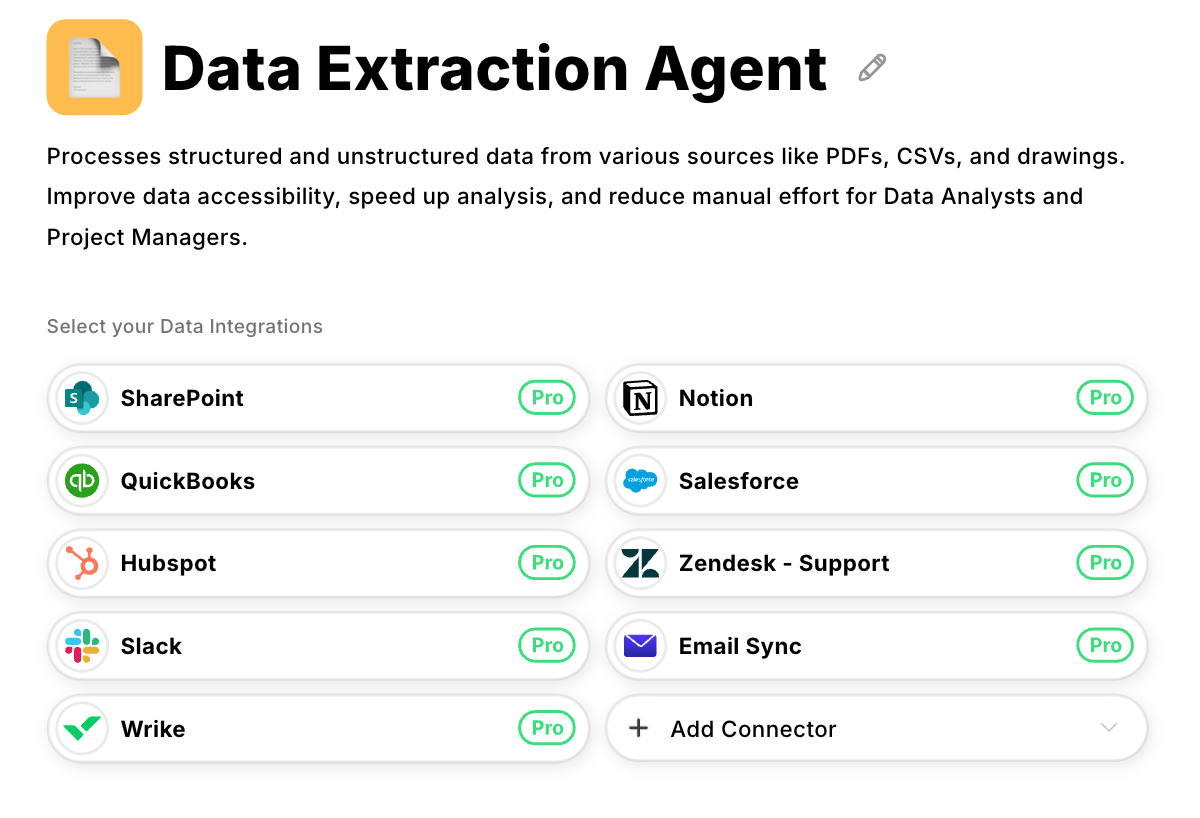

When you run on Datagrid, the Data Extraction Agent can handle a broad range of proposal formats, automatically flag deviations from your best-performing pricing structure, and streamline proposal review before the deal ever leaves draft stage.

You keep the judgment call. The agents clear the fog around it so decisions happen faster, margins stay intact, and your team focuses on winning, not wrangling documents.
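The deviation flagging described above can be sketched as a range check against values seen in past wins. The ranges, field names, and `flag_deviations` helper below are hypothetical placeholders. In practice the ranges would come from your own win/loss history.

```python
# Hypothetical acceptable ranges, e.g. derived from historically won deals.
WINNING_RANGES = {
    "discount_rate": (0.00, 0.20),
    "contract_years": (1, 3),
    "uptime_sla": (0.995, 1.0),
}


def flag_deviations(proposal: dict, ranges: dict = WINNING_RANGES) -> list[str]:
    """List every field that is missing or falls outside its expected range."""
    flags = []
    for field, (low, high) in ranges.items():
        value = proposal.get(field)
        if value is None:
            flags.append(f"{field}: missing")
        elif not low <= value <= high:
            flags.append(f"{field}: {value} outside {low}-{high}")
    return flags


# A hypothetical draft with an aggressive discount and no SLA stated.
draft = {"discount_rate": 0.28, "contract_years": 2}
flags = flag_deviations(draft)
for flag in flags:
    print(flag)
```

The function only reports. Whether to approve the discount or add the SLA remains the rep's call.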

Set Up Automated Proposal Comparison

Implementation follows three phases: structure your criteria and centralize proposal data, validate AI accuracy through controlled testing, then scale across your sales team with performance dashboards. Sales ops can execute this without heavy IT involvement.

Define Criteria and Connect Data Sources

Begin by identifying the five to seven proposal elements that determine wins (price structure, scope, SLA strength, term length, risk clauses, competitive differentiators), then weight them. Working backward from past win/loss data keeps the list focused and forces trade-off clarity; exhaustive checklists dilute focus.

Next, audit where proposals currently live. You'll find PDFs on shared drives, Word files attached to CRM records, redlined contracts in email threads, and spreadsheets in personal folders. Note the formats, owners, and access controls. They become connection requirements for the AI.

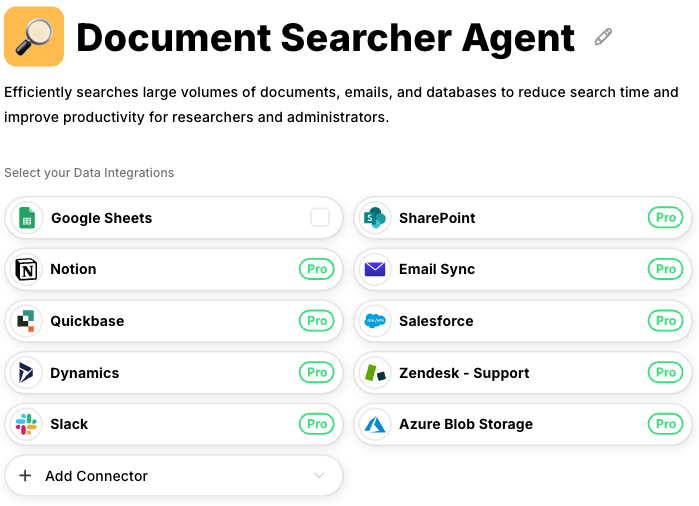

Link each repository to Datagrid. Datagrid's Document Searcher Agent connects to Salesforce, HubSpot, Google Drive, Box, and SharePoint through secure access and leaves files where they already live.

Tag incoming documents with opportunity IDs, outcomes (won, lost, in-progress), and dates. This metadata ties every extracted clause or price table back to deal results, creating a training set that improves accuracy over time.

Pilot and Validate Accuracy

Choose 30 to 50 recent proposals spanning wins, losses, formats, and contract sizes. Feed them through Datagrid's AI agents (the extraction, normalization, and scoring agents working together) while a human reviewer scores the same documents in parallel.

Inspect extraction accuracy (did the AI capture numbers, clauses, and timelines correctly?), scoring relevance (do high-scoring proposals align with wins?), and edge cases (complex RFP matrices, scanned PDFs, competitor documents).

When mismatches appear, adjust parsing rules or criterion weights and rerun. Log precision and recall for key fields, cycle time saved per deal, and stakeholder confidence scores. A pilot approach rooted in measurable metrics builds trust for broader rollout. Scale once the AI consistently achieves high field accuracy and surfaces meaningful win/loss patterns.
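For the precision and recall log, a minimal per-field calculation compares what the AI extracted with what the human reviewer recorded for the same documents. The sketch assumes both sides are lists of dictionaries in the same document order, with `None` or a missing key meaning "no value". The helper name and sample data are hypothetical.

```python
def field_precision_recall(
    extracted: list[dict], truth: list[dict], field: str
) -> tuple[float, float]:
    """Precision and recall for one field across paired documents."""
    true_positive = predicted = actual = 0
    for ext, ref in zip(extracted, truth):
        ext_val, ref_val = ext.get(field), ref.get(field)
        if ext_val is not None:
            predicted += 1  # the AI produced a value
        if ref_val is not None:
            actual += 1     # the reviewer found a value
        if ext_val is not None and ext_val == ref_val:
            true_positive += 1
    precision = true_positive / predicted if predicted else 0.0
    recall = true_positive / actual if actual else 0.0
    return precision, recall


# Hypothetical pilot: four proposals, one missed value and one wrong value.
truth = [{"total_price": 100}, {"total_price": 200},
         {"total_price": 300}, {"total_price": 400}]
extracted = [{"total_price": 100}, {"total_price": 200},
             {"total_price": None}, {"total_price": 450}]

precision, recall = field_precision_recall(extracted, truth, "total_price")
print(round(precision, 2), round(recall, 2))  # 0.67 0.5
```

Precision drops when the AI extracts wrong values, while recall drops when it misses values that were present, so logging both shows which kind of fix a field needs.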

Scale Across Your Sales Team

Lead the rollout with pilot numbers (hours reclaimed, faster approvals, higher win rates) and demo live comparisons inside the CRM so sellers avoid new logins. Mandate usage for deals above a threshold, and pair the mandate with hands-on training. Walk through live opportunities and show how flagged discounts or missing SLAs trigger guided fixes.

Highlighting early champions on team calls and sharing quick-hit success stories counters resistance. Performance dashboards that pull data from Datagrid's AI agents should track proposal turnaround time, discount variance, and win rate uplift so leadership reinforces good behavior.
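The three dashboard metrics named above can be computed from a flat list of deal records. This is a sketch under stated assumptions: the record fields, the baseline win rate, and the `dashboard_metrics` helper are hypothetical, and discount variance is taken as the population variance of discount rates.

```python
from statistics import mean, pvariance


def dashboard_metrics(deals: list[dict], baseline_win_rate: float) -> dict:
    """Summarize turnaround time, discount variance, and win rate uplift."""
    closed = [d for d in deals if d["outcome"] in ("won", "lost")]
    if not closed:
        raise ValueError("need at least one closed deal to compute win rate")
    win_rate = sum(d["outcome"] == "won" for d in closed) / len(closed)
    return {
        "avg_turnaround_days": mean([d["turnaround_days"] for d in deals]),
        "discount_variance": round(pvariance([d["discount_rate"] for d in deals]), 6),
        "win_rate_uplift": round(win_rate - baseline_win_rate, 4),
    }


# Hypothetical deals since rollout, compared with a pre-rollout win rate of 50%.
deals = [
    {"outcome": "won", "turnaround_days": 2, "discount_rate": 0.10},
    {"outcome": "lost", "turnaround_days": 4, "discount_rate": 0.20},
    {"outcome": "won", "turnaround_days": 3, "discount_rate": 0.10},
    {"outcome": "won", "turnaround_days": 3, "discount_rate": 0.20},
]
metrics = dashboard_metrics(deals, baseline_win_rate=0.50)
print(metrics)
```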

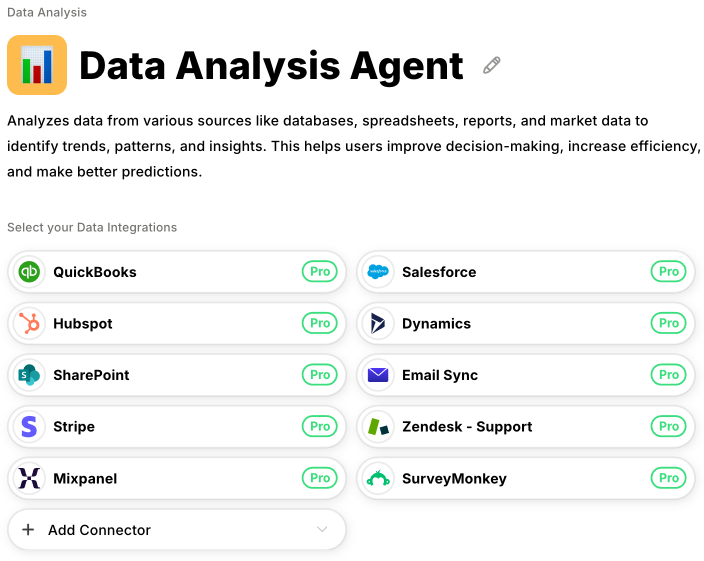

Make continuous improvement habitual. Datagrid's Data Analysis Agent processes every new deal, spotting correlations (e.g., three-year terms with phased payments outperform one-year prepaid contracts) and suggesting updated weights or playbook tweaks.

Govern changes through quarterly reviews with sales, finance, and legal so the scoring model evolves with market reality and institutional knowledge keeps compounding even when people change roles.

Simplify Proposal Comparison with Datagrid

Datagrid's AI agents eliminate the manual work that buries proposal intelligence in scattered documents, giving Sales Ops the infrastructure to build consistent evaluation processes that scale.

- Extract proposal data without manual entry: The Data Extraction Agent processes PDFs, Word files, and CRM attachments automatically, pulling pricing, terms, scope, and timeline data into structured fields ready for comparison.

- Connect to your existing document repositories: Datagrid integrates with Salesforce, HubSpot, Google Drive, Box, and SharePoint, making years of historical proposals searchable without migrating files or rebuilding folder structures.

- Surface win/loss patterns automatically: The Data Analysis Agent identifies which pricing structures, discount thresholds, and competitive differentiators consistently correlate with closed deals, so you can update playbooks based on evidence rather than intuition.

- Flag deviations before deals leave draft: AI agents compare new proposals against your documented evaluation criteria and alert reps when pricing or terms fall outside the ranges that historically win.

- Build institutional knowledge that compounds: Every proposal processed feeds back into pattern recognition, so the system gets smarter as your team closes more deals and leadership gains visibility into what actually drives win rates.

Create a free Datagrid account to start automating proposal comparison across your sales team.