This article was last updated on December 9, 2025

You open the dashboard and see PDFs, mailroom scans, fax images, and portal uploads crammed into one queue. Before anyone can evaluate coverage, those files must be sorted into CMS-1500 bills, UB-04 claims, first-notice-of-loss sheets, and other templates. The sorting is still manual. Staff print faxes, eyeball layouts, and key batch numbers. Volume spikes from storms or open-enrollment periods break this process completely.

While adjusters wait, SLA clocks keep ticking. Misrouted forms add days to resolution times. Customers start calling for updates. Skilled adjusters burn hours on clerical triage instead of complex claim decisions that actually require their expertise.

This article explains how automated classification eliminates manual sorting bottlenecks, walks through implementation steps, and addresses the governance and change management considerations that determine success.

How Claims Forms Classification Creates Operational Bottlenecks

Document sorting forces your team to make seven critical decisions for every submission: document type, line of business, coverage, cause of loss, financial severity, routing owner, and urgency, often within minutes of receipt. When this happens manually, processing bottlenecks start before claims work even begins.

Physical Document Handling

Your intake team spends hours daily opening mail, separating stapled pages, and visually identifying everything from standard billing forms to handwritten flood claims. Faxes and email attachments get the same treatment, and every mis-sorted document travels to the wrong queue, creating delays that never show up on SLA reports.

Form Recognition and Data Entry

Form recognition and data capture create the next bottleneck. Staff manually confirm that each form uses the current template version, then key policy numbers, diagnosis codes, and loss dates line by line. Double entry catches some errors, but transposed digits still slip through, derail claims, and force rework.

Manual Categorization and Routing

With data finally entered, senior staff manually categorize each claim as auto, property, liability, or health and assign it to fast-track or complex queues. These decisions vary by person, shift, and current workload. One adjuster's "urgent" becomes another's "routine," sending identical claims to different queues and creating inconsistent processing times.

Validation and Corrections

Validation creates the final bottleneck. Your team checks for missing signatures and expired codes against payer filing requirements. Each correction triggers phone calls and email threads that turn five-minute checks into multi-day delays.

The Compounding Impact

These manual steps create five operational limits:

- High labor costs from redundant data entry and verification

- Frequent errors from manual keying and subjective decisions

- Inconsistent routing decisions that vary by person and shift

- No surge capacity during catastrophe spikes or enrollment periods

- Poor audit visibility into processing delays and bottlenecks

Manual processing drives longer cycle times, rising processing costs, burned-out adjusters, and frustrated policyholders wondering why simple claims take weeks to move.

How Automated Claims Form Classification Works

Automated classification transforms the chaotic first minutes of claim intake into instant, consistent decisions. Feed it your inbox (e.g., email attachments, fax images, portal uploads) and an AI agent can read every character, identify documents, and apply the same routing logic your most experienced clerk would use.

The system learns from corrections, so its judgment improves over time, delivering consistent triage even when claim volumes spike after a storm.

Document Identification and Data Extraction

AI agents start by identifying what's on the page. The system recognizes standard forms such as CMS-1500 and UB-04 as soon as their key fields appear, distinguishes them from other document types such as auto FNOL reports, and flags supporting receipts in the same batch. Extraction logic then pulls policy numbers, claimant names, dates, and loss descriptions.
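To make the identification and extraction steps concrete, here is a minimal Python sketch that assumes OCR has already turned each page into text. The anchor phrases, the `FORM_ANCHORS` and `FIELD_PATTERNS` tables, and the policy-number format are hypothetical placeholders, not Datagrid's method; production systems typically learn layout and text cues from labeled samples rather than relying on fixed strings.

```python
from __future__ import annotations

import re

# Hypothetical anchor phrases per document type. Real systems learn layout and
# text cues from labeled samples; these strings are illustrative assumptions.
FORM_ANCHORS = {
    "CMS-1500": ["HEALTH INSURANCE CLAIM FORM", "NUCC"],
    "UB-04": ["UB-04", "NUBC"],
    "AUTO_FNOL": ["FIRST NOTICE OF LOSS", "VEHICLE"],
}

# Hypothetical field patterns; adjust to your own policy-number and date formats.
FIELD_PATTERNS = {
    "policy_number": re.compile(r"POLICY\s*(?:NO\.?|NUMBER)[:\s]*([A-Z]{2}\d{6,10})"),
    "loss_date": re.compile(r"DATE OF LOSS[:\s]*(\d{2}/\d{2}/\d{4})"),
}


def identify_form(text: str) -> tuple[str, float]:
    """Return the best-matching form type and the fraction of its anchors found."""
    upper = text.upper()
    best_type, best_score = "UNKNOWN", 0.0
    for form_type, anchors in FORM_ANCHORS.items():
        score = sum(anchor in upper for anchor in anchors) / len(anchors)
        if score > best_score:
            best_type, best_score = form_type, score
    return best_type, best_score


def extract_fields(text: str) -> dict[str, str | None]:
    """Pull each configured field, or None when its pattern is absent."""
    upper = text.upper()
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(upper)
        fields[name] = match.group(1) if match else None
    return fields
```

The anchor score doubles as a crude confidence value: a page that matches only some anchors for its best type is a natural candidate for human review.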

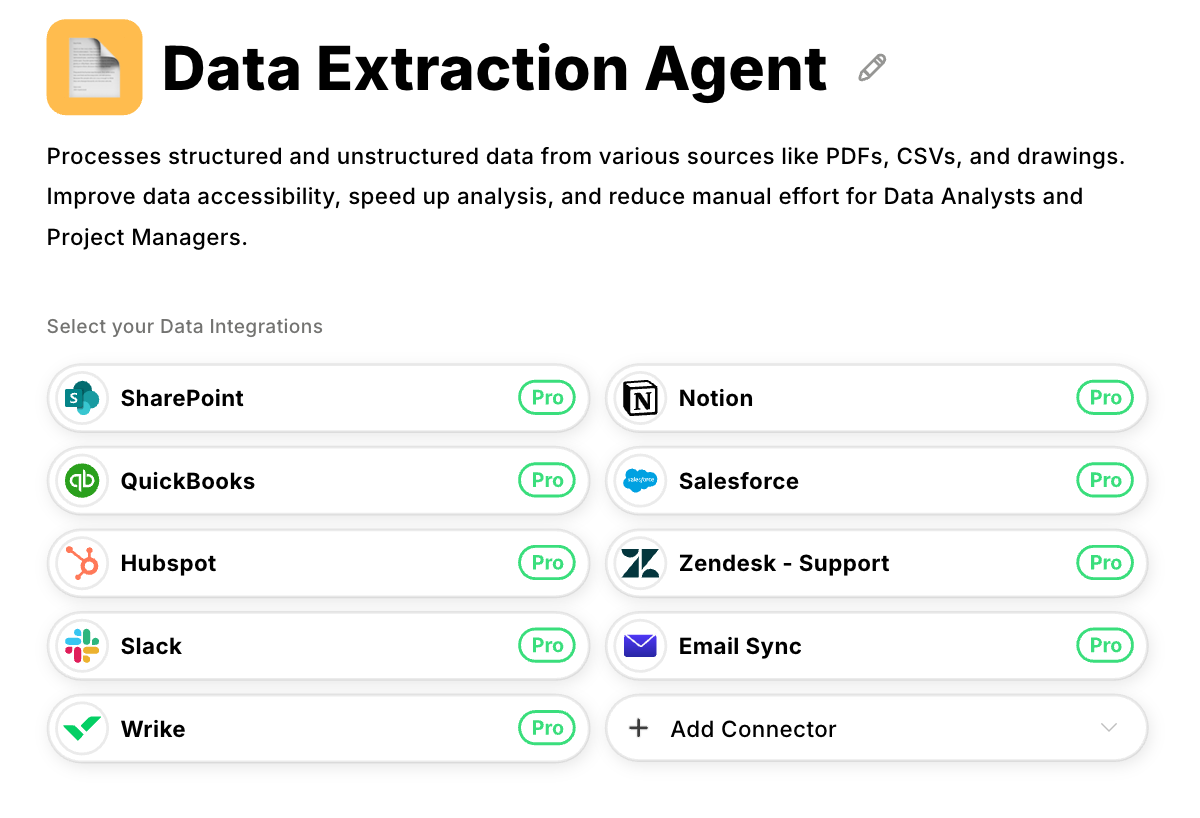

Datagrid's Data Extraction Agent handles structured PDFs and blurry phone photos through the same process, eliminating template maintenance headaches.

The classification layer tags line of business, cause of loss, severity, and urgency. It assigns confidence scores and decides whether claims can flow straight through or need human review. This removes subjective, error-prone handoffs that slow processing and create inconsistencies across your teams.
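A simplified sketch of the straight-through-or-review decision follows. It assumes each predicted label arrives with a confidence score between 0 and 1; the `THRESHOLDS` values, the `LabelPrediction` type, and the label names are hypothetical and would come from your own risk tolerance.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical per-label confidence thresholds: stricter where a misroute costs more.
THRESHOLDS = {"document_type": 0.95, "line_of_business": 0.90, "severity": 0.85, "urgency": 0.85}


@dataclass
class LabelPrediction:
    name: str          # which decision this is, e.g. "line_of_business"
    value: str         # predicted label, e.g. "property"
    confidence: float  # model confidence in [0, 1]


def triage(predictions: list[LabelPrediction]) -> tuple[str, list[str]]:
    """Send a claim straight through only if every label clears its threshold.

    Returns the decision plus the names of labels that fell short, so a
    reviewer sees exactly which decisions need a human look. Labels missing
    from THRESHOLDS default to 1.0, so they always go to review.
    """
    weak = [p.name for p in predictions if p.confidence < THRESHOLDS.get(p.name, 1.0)]
    return ("human_review" if weak else "straight_through"), weak
```

Defaulting unknown labels to a threshold of 1.0 keeps the behavior conservative: anything the configuration does not cover goes to a person.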

Connect AI Agents to Your Core Systems

AI agents can sit between intake and your core systems, acting as an intelligent router.

Upstream, they can ingest customer portals, monitored email boxes, fax servers, and EDI feeds without forcing channels to change their workflows.

Downstream, they can push clean metadata into your administration platform to open or update cases, call policy services for coverage checks, attach tagged documents in your content repository, and trigger workflow engines managing SLAs.

Analytics tools receive standardized labels for trend reporting, while exception queues surface low-confidence items for adjuster review. Start with a single intake channel and expand integration points gradually. No big-bang replacements required.

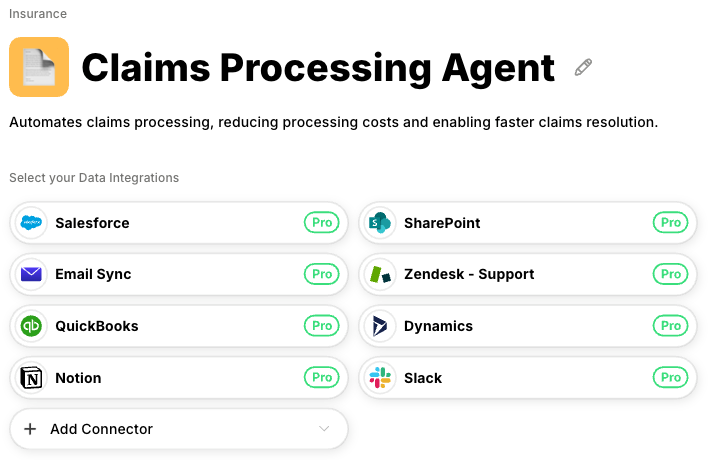

Datagrid's Claims Processing Agent automates the claims routing workflow by applying your business rules and capturing decisions for continuous improvement, while working in tandem with human oversight for complex or final decisions.

Build Your Implementation Plan

Approach implementation as a data workflow transformation that moves from manual bottlenecks to intelligent automation. You progress from mapping current inefficiencies to running self-improving systems, one controllable phase at a time.

Each phase (scoping, standardization, configuration, rollout) must deliver measurable improvements before advancing to the next.

Define Scope and Baseline Current State

Start by quantifying where manual processes create the biggest bottlenecks. Document volumes, error rates, and average handling time reveal which workflows need automation first. Claims arriving through email, fax, or web portals may appear similar, yet each channel carries different costs and quality challenges.

Catalog every document type flowing through your intake systems. Most operations handle a core set of dominant forms (medical bills, hospital claims, and proprietary first-notice-of-loss forms) plus supporting evidence that adds complexity.

Map these forms against current metrics including minutes of data entry per page, percentage of returns for incomplete information, and routing accuracy rates.
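One way to turn sampled intake logs into that baseline is sketched below. The `IntakeRecord` fields are a hypothetical schema; substitute whatever your document management or workflow system actually records.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class IntakeRecord:
    """One manually processed document, sampled from intake logs (hypothetical schema)."""
    form_type: str
    pages: int
    entry_minutes: float
    returned_incomplete: bool
    routed_queue: str
    correct_queue: str  # queue the claim should have gone to, per later review


def baseline(records: list[IntakeRecord]) -> dict[str, dict[str, float]]:
    """Per form type: minutes of entry per page, return rate, routing accuracy, volume."""
    groups: dict[str, list[IntakeRecord]] = defaultdict(list)
    for r in records:
        groups[r.form_type].append(r)
    report = {}
    for form_type, rows in groups.items():
        n = len(rows)
        report[form_type] = {
            "minutes_per_page": sum(r.entry_minutes for r in rows) / sum(r.pages for r in rows),
            "return_rate": sum(r.returned_incomplete for r in rows) / n,
            "routing_accuracy": sum(r.routed_queue == r.correct_queue for r in rows) / n,
            "volume": float(n),
        }
    return report
```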

Document exactly where adjusters make line of business, cause of loss, or urgency decisions. These decision points become your automation requirements. Set concrete success targets so you can measure impact after deployment.

Standardize Taxonomies and Connect Systems

AI agents learn to recognize equivalent terms across your organization, but starting with consistent language accelerates training and reduces errors. Build a taxonomy that defines document types, claim categories, severity levels, and queue destinations without ambiguity.

When different regions classify identical auto accidents as "Auto-Minor" versus "Vehicle-Low Impact," AI agents can learn these equivalencies through corrections, but resolving inconsistencies upfront improves initial accuracy and reduces the training period.
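A normalization table is the simplest way to resolve such inconsistencies before training. In this sketch, `SEVERITY_SYNONYMS` and the canonical values `AUTO_LOW` and `AUTO_HIGH` are hypothetical; only the two regional labels "Auto-Minor" and "Vehicle-Low Impact" come from the example above.

```python
from __future__ import annotations

# Hypothetical canonical taxonomy: regional labels mapped to one shared value.
SEVERITY_SYNONYMS = {
    "auto-minor": "AUTO_LOW",
    "vehicle-low impact": "AUTO_LOW",
    "auto-major": "AUTO_HIGH",
    "vehicle-high impact": "AUTO_HIGH",
}


def normalize_label(raw: str) -> str | None:
    """Map a regional label to its canonical value; None means it is not mapped yet."""
    key = " ".join(raw.strip().lower().split())  # ignore case and extra whitespace
    return SEVERITY_SYNONYMS.get(key)


def find_unmapped(labels: list[str]) -> set[str]:
    """Return labels from historical data that the taxonomy does not cover yet."""
    return {label for label in labels if normalize_label(label) is None}
```

Running `find_unmapped` over historical labels gives the taxonomy team a concrete list of terms to resolve before configuration starts.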

Map the data elements that drive each decision. Severity scoring depends on claimed amounts, policy deductibles, and injury indicators. Make these fields mandatory across all intake channels.
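The sketch below shows how mandatory fields and a severity score might fit together. The field names and the 25,000 exposure cutoff are hypothetical placeholders, and the rule that any injury indicator escalates severity is an illustrative assumption rather than a recommended policy.

```python
from __future__ import annotations

# Severity drivers named above, treated here as mandatory at intake (hypothetical names).
REQUIRED_FIELDS = ("claimed_amount", "policy_deductible", "injury_indicator")


def missing_fields(claim: dict) -> list[str]:
    """Return required fields that are absent or empty, in a stable order."""
    return [f for f in REQUIRED_FIELDS if claim.get(f) in (None, "")]


def severity(claim: dict, high_exposure: float = 25_000.0) -> str:
    """Toy severity tier; the 25,000 cutoff is a hypothetical placeholder.

    Exposure is the claimed amount above the deductible. Any injury
    indicator escalates the claim regardless of amount.
    """
    gaps = missing_fields(claim)
    if gaps:
        raise ValueError(f"cannot score severity, missing: {', '.join(gaps)}")
    exposure = max(0.0, float(claim["claimed_amount"]) - float(claim["policy_deductible"]))
    if claim["injury_indicator"] or exposure >= high_exposure:
        return "high"
    return "low" if exposure == 0 else "medium"
```

Raising an error on missing fields, rather than guessing, is what makes the fields effectively mandatory: the claim cannot be scored until intake supplies them.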

Connect your data sources (inboxes, portals, and scanning systems) to a central pipeline that feeds classified documents into core platforms and content repositories.

Test the complete flow by submitting sample documents, verifying they reach the correct adjuster queues with accurate metadata, and confirming the pipeline handles expected volumes without data loss.

Configure and Validate the AI Agent

Collect representative samples of every document type and label them with the precision your business rules demand. Train document recognition systems to identify structural elements like distinctive borders or shaded account blocks, then build extraction rules that capture policy numbers, loss dates, and diagnostic codes accurately.

Code your business logic into the system. Claims exceeding deductible thresholds with bodily injury indicators route to complex-loss teams. Straightforward windshield repairs flow directly to fast-track queues. Set confidence thresholds that automatically handle high-certainty items while routing ambiguous cases to exception queues with feedback mechanisms for continuous learning.
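The two example rules above, plus a confidence gate, could be encoded roughly as follows. The queue names, the `Claim` fields, and the 0.90 threshold are assumptions for illustration. Returning a reason alongside each queue makes it easier to answer adjusters who ask why a claim landed where it did.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Claim:
    line_of_business: str
    cause_of_loss: str
    claimed_amount: float
    deductible: float
    bodily_injury: bool
    confidence: float  # lowest confidence across the claim's predicted labels


AUTO_THRESHOLD = 0.90  # hypothetical: below this, a person decides


def route(claim: Claim) -> tuple[str, str]:
    """Apply rules in priority order; return (queue, human-readable reason)."""
    if claim.confidence < AUTO_THRESHOLD:
        return "exception_queue", f"confidence {claim.confidence:.2f} below {AUTO_THRESHOLD}"
    if claim.bodily_injury and claim.claimed_amount > claim.deductible:
        return "complex_loss", "bodily injury with amount above deductible"
    if claim.line_of_business == "auto" and claim.cause_of_loss == "windshield":
        return "fast_track", "straightforward windshield repair"
    return "standard", "no special rule matched"
```

Checking confidence first means a low-certainty prediction never reaches the business rules, so a misread field cannot accidentally fast-track a claim.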

Datagrid's Claims Processing Agent enforces these thresholds while capturing every adjuster correction as new training data, improving accuracy without additional engineering overhead.

Test against historical claim data before handling live customer documents. Run holdout validation using past claims to surface edge cases (handwritten forms, multi-document submissions) that require additional training data or specialized handling rules.
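A holdout evaluation that reports accuracy separately for edge cases might look like this sketch. It assumes historical claims are stored as dicts with hypothetical `id`, `label`, and `tags` keys, and that `classify` is whatever model or rule set you are validating. Hashing the claim ID keeps the split stable across runs, so results from different model versions stay comparable.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict
from typing import Callable


def in_holdout(doc_id: str, fraction: float = 0.2) -> bool:
    """Deterministic split: the same claim always lands on the same side."""
    bucket = int(hashlib.sha256(doc_id.encode()).hexdigest(), 16) % 100
    return bucket < fraction * 100


def evaluate(
    samples: list[dict], classify: Callable[[dict], str], fraction: float = 0.2
) -> dict[str, float]:
    """Accuracy on holdout samples, overall and per edge-case tag.

    Tags such as "handwritten" or "multi_document" are hypothetical examples.
    """
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for s in samples:
        if not in_holdout(s["id"], fraction):
            continue
        correct = classify(s) == s["label"]
        for group in ["overall", *s.get("tags", [])]:
            totals[group] += 1
            hits[group] += correct
    return {g: hits[g] / totals[g] for g in totals}
```

A tag whose accuracy lags the overall figure points to where additional training data or a specialized handling rule is needed.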

Pilot, Measure, and Expand

Deploy parallel workflows so teams can compare automated decisions against manual processes directly. Focus your pilot on a single region or product line to control change management while generating statistically valid performance data.

Track routing accuracy, handling time, and exception volumes against your baseline metrics. Schedule weekly checkpoints during the first month to adjust confidence thresholds and refine business rules without disrupting daily operations.
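A weekly checkpoint over parallel-run data could compute these comparisons as sketched here. `PilotItem` is a hypothetical record pairing the agent's decision, the manual decision, and the queue confirmed after review; the baseline figures are the ones you captured during scoping.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PilotItem:
    auto_queue: str | None  # None when the agent sent the item to exceptions
    manual_queue: str       # decision from the parallel manual process
    final_queue: str        # queue confirmed after review (treated as ground truth)
    handling_minutes: float


def rate(flags: list[bool]) -> float:
    return sum(flags) / len(flags) if flags else 0.0


def weekly_checkpoint(items: list[PilotItem], baseline_accuracy: float, baseline_minutes: float) -> dict:
    """Compare automated and manual routing for one pilot week."""
    if not items:
        raise ValueError("no pilot items this week")
    decided = [i for i in items if i.auto_queue is not None]
    report = {
        "auto_accuracy": rate([i.auto_queue == i.final_queue for i in decided]),
        "manual_accuracy": rate([i.manual_queue == i.final_queue for i in items]),
        "agreement": rate([i.auto_queue == i.manual_queue for i in decided]),
        "exception_rate": 1 - len(decided) / len(items),
        "avg_minutes": sum(i.handling_minutes for i in items) / len(items),
    }
    report["beats_baseline"] = (
        report["auto_accuracy"] >= baseline_accuracy and report["avg_minutes"] <= baseline_minutes
    )
    return report
```

Tracking agreement alongside accuracy is useful in shadow mode: disagreements are exactly the items worth discussing at each checkpoint.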

As accuracy stabilizes above your success targets, expand systematically by adding new document types, integrating additional intake channels, and increasing volumes. Each expansion follows the same pattern (scope, standardize, configure, validate), ensuring predictable results.

Document lessons learned in an implementation playbook so subsequent rollouts deliver faster deployment, lower costs, and higher accuracy than previous phases.

Maintain Governance and Risk Controls

Document routing accuracy directly impacts processing costs and compliance risk. Establish governance practices that maintain accuracy while enabling continuous improvement:

- Build a cross-functional team from operations, compliance, IT, and data science to own standards, define taxonomy, set accuracy targets, and determine when low-confidence documents require human review

- Log every decision including incoming document, model version, confidence score, and human overrides to create audit trails that satisfy regulators and enable root cause analysis when problems surface

- Monitor precision, recall, and exception volume by document class, triggering retraining when metrics drift beyond thresholds instead of waiting for complaints

- Sample and review decisions continuously rather than relying on one-off testing, and watch closely for personal data the system fails to catch, since missed personal data creates regulatory risk

- Set context-specific accuracy thresholds, accepting more false positives in classes where a misroute is cheap to correct, as long as recall stays high enough that relevant claims are not missed

- Link model performance to business impact through metrics like time savings, cost reduction, and compliance risk rather than abstract accuracy percentages
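The logging and monitoring practices above can share one data structure. This sketch assumes a hypothetical `DecisionLog` record per routing decision and treats the label after any human override as ground truth, so it only measures errors that reviewers actually caught; pair it with random sampling of unreviewed items. The 0.9 default floor is a placeholder.

```python
from __future__ import annotations

import json
from collections import Counter
from dataclasses import asdict, dataclass


@dataclass
class DecisionLog:
    """One audit record per routing decision (hypothetical schema)."""
    doc_id: str
    model_version: str
    predicted: str
    confidence: float
    final: str  # label after any human override; equals predicted if none

    @property
    def overridden(self) -> bool:
        return self.predicted != self.final


def to_json(entry: DecisionLog) -> str:
    """Serialize a record for an append-only audit store."""
    return json.dumps(asdict(entry), sort_keys=True)


def per_class_metrics(logs: list[DecisionLog]) -> dict[str, tuple[float, float]]:
    """(precision, recall) per class, using the post-override label as truth."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for e in logs:
        if e.predicted == e.final:
            tp[e.predicted] += 1
        else:
            fp[e.predicted] += 1  # predicted this class, but it was wrong
            fn[e.final] += 1      # the true class was missed
    out = {}
    for c in set(tp) | set(fp) | set(fn):
        p = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        r = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        out[c] = (p, r)
    return out


def needs_retraining(metrics: dict[str, tuple[float, float]], floors: dict[str, float]) -> list[str]:
    """Classes whose precision or recall fell below their floor (default 0.9)."""
    return sorted(c for c, (p, r) in metrics.items() if min(p, r) < floors.get(c, 0.9))
```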

Clear ownership prevents the finger-pointing that kills automation programs, while continuous monitoring ensures the system adapts to new products, regulations, and market conditions.

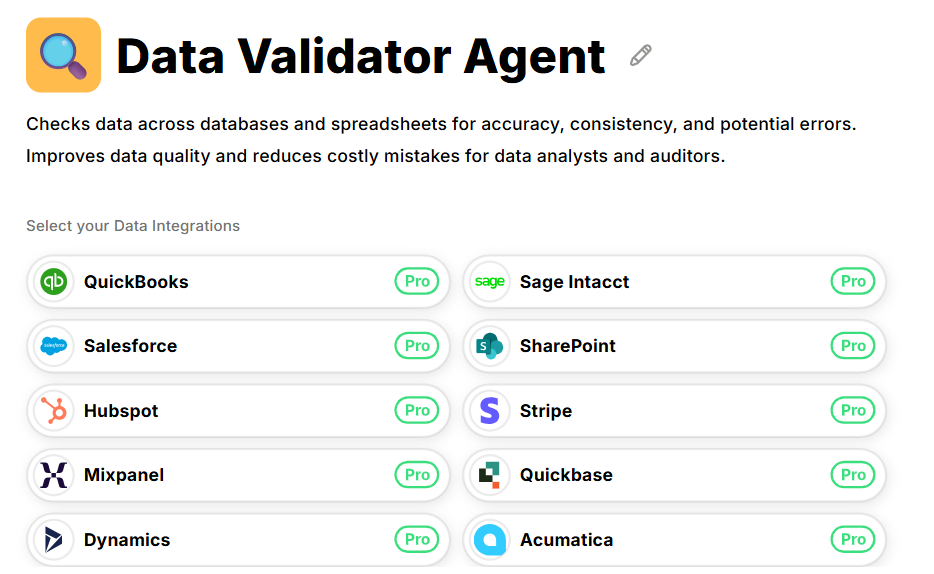

Datagrid's Data Validator Agent independently re-scores processed items and flags anomalies before they reach downstream systems. Discrepancies feed back to your governance team for rule corrections or model retraining without production disruption.

Create transparency throughout the organization. Dashboards showing handling time, misroute rates, straight-through processing percentages, and ROI keep executives engaged and give adjusters confidence that the system works fairly. Regular governance reviews then turn these insights into concrete rule updates and retraining decisions.

Prepare Your Claims Teams for Change

Technology fails when the people using it feel sidelined. Automated processing restructures how work flows from the moment a document arrives, moving judgment calls from experienced adjusters into algorithms. The mailroom clerk who sorted forms and the adjuster who spent mornings categorizing storm claims worry their expertise becomes irrelevant.

Prepare your team for successful adoption through these steps:

- Address concerns directly in team meetings. Explain that routine sorting disappears, but high-stakes investigation, negotiation, and customer resolution become their primary focus.

- Redefine success metrics before deployment. Replace "claims sorted per hour" with measures that value expertise (first-pass resolution rates, customer satisfaction scores, and complex case handling quality).

- Deploy transparent dashboards showing accuracy, misroute percentages, and queue volumes. When adjusters see model performance data and understand where human judgment remains essential, concerns about opaque automation diminish.

- Run shadow mode deployment to build confidence. Operate the system alongside manual processes for several weeks, comparing routing decisions side-by-side. Teams correct misrouted items in real time, generating labeled feedback that improves model accuracy. This approach prioritizes user acceptance alongside technical performance.

- Provide intensive post-deployment support. Designate regional champions with direct access to technical teams, and keep help easy to reach during the first month, when anyone should feel free to ask, "Why did this bodily injury claim route to property damage?"

- Celebrate measurable wins like cleared backlogs after catastrophe events or veteran adjusters mentoring new hires instead of keying form codes, then conduct regular retrospectives to incorporate lessons learned.

Datagrid's Claims Processing Agent uses AI to automate document routing and classification while keeping humans in charge of complex decisions. The result is a partnership between human expertise and automated processing rather than a replacement, and deployment becomes an ongoing improvement process that raises both efficiency and job satisfaction without sacrificing the quality customers expect.

Automate Insurance Claims Classification with Datagrid

Datagrid's AI agents transform claims intake from a manual bottleneck into an automated workflow that scales with your volume:

- Document extraction across formats: The Data Extraction Agent processes structured PDFs, fax images, and phone photos through the same pipeline, eliminating the template maintenance and format-specific handling that slows manual intake.

- Intelligent routing with your business rules: The Claims Processing Agent applies your configured logic for line of business, severity, and urgency decisions, routing straightforward claims to fast-track queues while surfacing complex cases for adjuster review.

- Continuous accuracy improvement: Every adjuster correction feeds back into the system as training data, improving classification accuracy over time without requiring additional engineering effort or manual retraining cycles.

- Built-in validation and governance: The Data Validator Agent independently checks processed claims and flags discrepancies before they reach downstream systems, maintaining audit trails and catching drift before it impacts compliance.

- Integration with existing platforms: AI agents connect to your core claims systems, content repositories, and workflow engines without requiring platform replacement, allowing phased implementation that delivers value at each stage.

Create your free Datagrid account to see how AI agents can eliminate classification bottlenecks in your claims operation.